
In this Discussion

- Chrisir April 2018

- jb4x April 2018

- jeremydouglass April 2018

- koogs April 2018

- Rayle April 2018

Use 2D array to find coincidences of words

I am trying to find out how many times words from a list appear together in the sentences of a given text. The first thing I did was to find the sentences containing a particular word ("law" in the example below), put them in an array, and count how many times the other words appear with an IntDict.

This is the code:

```java
StringList results;
IntDict coincidence;

void setup() {
  // both collections must be created before they are used
  results = new StringList();
  coincidence = new IntDict();

  String [] text = loadStrings("text.txt");
  String onePhrase = join(text, " ").toLowerCase();
  String [] phrases = splitTokens(onePhrase, ".?!");
  String search = "\\blaw.?\\b";
  String [] wordList = loadStrings("wordList.txt");

  // keep only the sentences that contain the search word
  for (int i = 0; i < phrases.length; i++) {
    String [] matching = match(phrases[i], search);
    if (matching != null) {
      results.append(phrases[i]);
    }
  }

  // split those sentences into single words
  String [] resultsArray = results.array();
  String joinResults = join(resultsArray, " ");
  String [] splitResults = splitTokens(joinResults, " -,.:;¿?¿!/_");

  // count the words that are also in wordList
  for (int i = 0; i < splitResults.length; i++) {
    for (String searching : wordList) {
      if (splitResults[i].equals(searching)) {
        coincidence.increment(splitResults[i]);
      }
    }
  }
  println(coincidence);
}
```

But if I want to count how many times each word of the list appears together with each of the other ones, I need to repeat the process many times.

I tried with a 2D array (String[][]) but it doesn't work. Now I am stuck and I don't know how to proceed. I would appreciate any ideas.

```java
StringList list;
String [] search;

void setup() {
  list = new StringList(); // must be created before append()

  String [] text = loadStrings("text.txt");
  String onePhrase = join(text, " ").toLowerCase();
  String [] phrases = splitTokens(onePhrase, ".?!");

  Table table = loadTable("listOfWords.csv", "header");
  for (int i = 0; i < table.getRowCount(); i++) {
    TableRow row = table.getRow(i);
    list.append(row.getString("Word"));
  }
  search = list.array(); // convert once, after the list is complete

  for (int i = 0; i < phrases.length; i++) {
    for (int j = 0; j < search.length; j++) {
      String [][] matching = matchAll(phrases[i], search[j]);
      printArray(matching);
    }
  }
}
```


Answers

please post the data files you are using

you wrote

This means you need to count something.

The rest is quite unclear.

Do you mean one word? Or two? Or all of them?

Do you mean in the order they have in the list, or in any order?

So, given a text like "The law helps society." and the word list "law, society", would you count this or not?

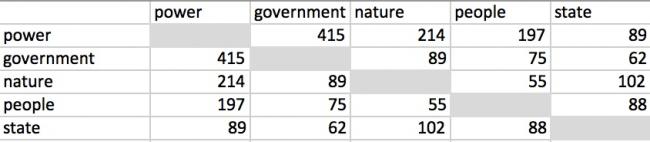

Thank you Chrisir. I am sorry for not being clear enough. The idea is to know how many times a word, like "law", appears in the same sentence as the other words: the co-occurrences in the sentences. Something like this:

I have, for example, this list of words in a csv file with the number of times they appear in the full text.

With the first code it is possible to know how many words from the list appear in the same sentence as "power" (for example), and how many times:

If I want to know which words of my list appear in the same sentence as the word "people", and how many times, I have to run the code again, replacing "power" with "people" in `String search`, and so on. It is boring and not very practical. So I was wondering how to do the same thing for all the words in the list. The text I experimented with is The Second Treatise of Government, downloaded from here: gutenberg.org/cache/epub/7370/pg7370.txt.

This is the list of the first 20 most frequent words:

not sure, but the technical solution is to go over the "list of the first 20 most frequent words" in a nested for loop:

this means the outer for loop calls the inner for loop

that means each word is checked against each other word

the equivalent visual is a grid like a chess board (or your result table above) where you visit each field once 1 2 3 4 5 6 ...

the function occurTogetherInOneSentence returns a boolean value; it receives 2 Strings. It loops over all sentences and checks both words in each sentence (e.g. `if (sentence[i].contains(str1) && sentence[i].contains(str2)) return true;`)

`resultTable` contains the values above like 415, 214... Define it before `setup()`: `int[][] resultTable = new int[20][20];`

I think that can be improved on: looping over all the sentences 400 times (once for each cell of the 20 x 20 grid) can't be the most efficient way.
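A minimal sketch of the nested-loop idea, written as plain Java so it runs outside Processing. The class and method names (`NestedLoopCooccurrence`, `countTogether`, `buildTable`) are hypothetical, the three sentences are made-up test data, and `countTogether` returns a count rather than a boolean so the result can go straight into a `resultTable`-style array. It keeps the `contains()` substring test used in the thread.

```java
import java.util.Arrays;

class NestedLoopCooccurrence {

    // Number of sentences that contain both words (substring test, as in the thread).
    static int countTogether(String[] sentences, String w1, String w2) {
        int count = 0;
        for (String s : sentences) {
            if (s.contains(w1) && s.contains(w2)) count++;
        }
        return count;
    }

    // Visits every cell of the n x n grid: each word against each other word.
    static int[][] buildTable(String[] sentences, String[] words) {
        int n = words.length;
        int[][] resultTable = new int[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                if (i == j) continue; // a word paired with itself is left at 0
                resultTable[i][j] = countTogether(sentences, words[i], words[j]);
            }
        }
        return resultTable;
    }

    public static void main(String[] args) {
        String[] sentences = {
            "the law of nature binds every man",
            "the power of the people is great",
            "no man has power over the law"
        };
        String[] words = {"law", "power", "man"};
        for (int[] row : buildTable(sentences, words)) {
            System.out.println(Arrays.toString(row));
        }
        // prints [0, 1, 2] / [1, 0, 1] / [2, 1, 0]
    }
}
```

The cost is one full pass over the sentences for every word pair, which is the repetition being questioned here.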

My mind says hashmaps. But it's 8am on a bank holiday so that's all it says at the moment.

For each sentence, find the popular words that the sentence contains, then increment the relevant counts... That last bit may be non-trivial.

ah, true

I solved this with the 2D array `resultTable`.

My mind screams hashMap all the time too, but we can leave that for later.

(My non-trivial worry was that you might have 5 matches in a sentence and have to work out all the pairs of those words in order to increment the correct counts. But, yeah, it's just a nested loop over the same list twice.)
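A sketch of the per-sentence alternative under the same assumptions (plain Java, hypothetical names, substring matching, made-up sentences): each sentence is scanned once for the list words it contains, and a nested loop over just those words increments every pair.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class PerSentenceCooccurrence {

    static int[][] buildTable(String[] sentences, String[] words) {
        int n = words.length;
        int[][] table = new int[n][n];
        for (String sentence : sentences) {
            // 1. indices of the list words this sentence contains
            List<Integer> found = new ArrayList<>();
            for (int k = 0; k < n; k++) {
                if (sentence.contains(words[k])) found.add(k);
            }
            // 2. every pair of found words shares this sentence once
            for (int a = 0; a < found.size(); a++) {
                for (int b = a + 1; b < found.size(); b++) {
                    int i = found.get(a);
                    int j = found.get(b);
                    table[i][j]++;
                    table[j][i]++; // keep the table symmetric
                }
            }
        }
        return table;
    }

    public static void main(String[] args) {
        String[] sentences = {
            "the law of nature binds every man",
            "the power of the people is great",
            "no man has power over the law"
        };
        String[] words = {"law", "power", "man"};
        for (int[] row : buildTable(sentences, words)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

With 5 matches in one sentence the pair loops touch 10 pairs, and the text is read only once regardless of the length of the word list.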

It was more obvious at 9:30 than it was at 8

Thank you very much Chrisir and koogs. Your ideas are very inspiring, and a nice solution I had not thought of. I will try with the 2D array before trying with a hashMap.

Thank you again for your valuable ideas. This is the final code:

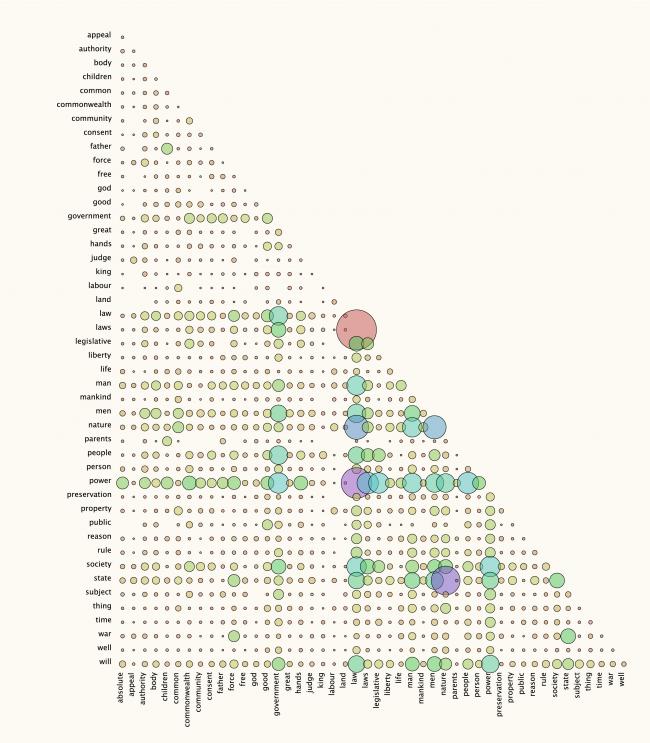

And this is the nice output:

What are lines 44 and 45 doing? In fact, what is lines?

Lines 37, 41 and 42 look pointless

it's true, 37/40/41 can be optimized. My bad.

You are right, koogs, lines 44 and 45 are the remains of an attempt to write code to save the 2D array to a csv file. I forgot to delete them.

Well done!

Good point

Also the diagonal of the table should be empty

power shouldn’t be checked against power

the sentence splitting code also doesn't handle abbreviations, like "i.e." and "U.S."
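One possible workaround, sketched in plain Java and assuming a hand-made abbreviation list (`ABBREVIATIONS` and the other names are hypothetical): hide the periods inside known abbreviations before splitting on `.?!`, then put them back. It is only a heuristic; an abbreviation that really ends a sentence will merge that sentence with the next one.

```java
import java.util.ArrayList;
import java.util.List;

class AbbreviationSafeSplit {
    // Hypothetical, hand-made list; extend it for the text being analysed.
    static final String[] ABBREVIATIONS = {"i.e.", "e.g.", "u.s."};
    // A character assumed not to occur in the text, used to hide periods temporarily.
    static final char PLACEHOLDER = (char) 1;

    static List<String> splitSentences(String text) {
        String hidden = text.toLowerCase();
        for (String abbr : ABBREVIATIONS) {
            hidden = hidden.replace(abbr, abbr.replace('.', PLACEHOLDER));
        }
        List<String> sentences = new ArrayList<>();
        for (String part : hidden.split("[.?!]+")) {
            String s = part.trim();
            if (!s.isEmpty()) sentences.add(s.replace(PLACEHOLDER, '.')); // restore the periods
        }
        return sentences;
    }

    public static void main(String[] args) {
        for (String s : splitSentences("The U.S. has laws, i.e. rules. Men obey them!")) {
            System.out.println(s);
        }
        // prints "the u.s. has laws, i.e. rules" and "men obey them"
    }
}
```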

As usual, you both go straight to the point. I was thinking about how to avoid the problems you mention. For the first one ("government" contains "men", etc.) I think it is possible to use a regular expression with the `match()` function (lines 38, 39, and 59). Also, we can remove abbreviations like i.e. or U.S. to avoid the problem when we split the string. I think it is not an optimal solution, because "i.e." is not very significant, but U.S. could be important. Anyway, there is an English stopwords list to remove the abbreviations (lines 22, 26, 42-51).

Of course, the diagonal is interesting. In fact we only need half of the square matrix (the upper or the lower half). If we put 1 in the diagonal and fill one half of the matrix with the reciprocals of the other half, we obtain a positive reciprocal matrix (I have to confess I don't know how to do this in the for loop, so I use a trick in line 97). If we think of the co-occurrences as weights, it is possible to calculate the eigenvector of the matrix and compare this vector with those from other texts or authors. The outputs could be interesting.
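A sketch of the reciprocal-matrix idea in plain Java, under loud assumptions: the upper half of the count table supplies a[i][j], the lower half is filled with 1 / a[i][j], the diagonal is 1, and every off-diagonal count is positive (a zero count would need special handling). The principal eigenvector is approximated by power iteration and normalized to sum to 1. The names and the example counts are hypothetical.

```java
import java.util.Arrays;

class ReciprocalEigen {
    // a[i][i] = 1, a[i][j] = counts[i][j] for i < j, a[j][i] = 1 / counts[i][j].
    // Assumes every off-diagonal count in the upper half is > 0.
    static double[][] reciprocalMatrix(int[][] counts) {
        int n = counts.length;
        double[][] a = new double[n][n];
        for (int i = 0; i < n; i++) {
            a[i][i] = 1.0;
            for (int j = i + 1; j < n; j++) {
                a[i][j] = counts[i][j];
                a[j][i] = 1.0 / counts[i][j];
            }
        }
        return a;
    }

    // Power iteration: multiply by the matrix and rescale so the entries sum to 1.
    static double[] principalEigenvector(double[][] a, int iterations) {
        int n = a.length;
        double[] v = new double[n];
        Arrays.fill(v, 1.0 / n);
        for (int it = 0; it < iterations; it++) {
            double[] next = new double[n];
            double sum = 0;
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) next[i] += a[i][j] * v[j];
                sum += next[i];
            }
            for (int i = 0; i < n; i++) next[i] /= sum;
            v = next;
        }
        return v;
    }

    public static void main(String[] args) {
        int[][] counts = {{0, 2, 4}, {2, 0, 2}, {4, 2, 0}}; // made-up, perfectly consistent counts
        double[] v = principalEigenvector(reciprocalMatrix(counts), 50);
        System.out.println(Arrays.toString(v));
    }
}
```

For a perfectly consistent matrix like the one in `main`, the vector is exactly (4/7, 2/7, 1/7). Real co-occurrence counts will not be consistent, so the result there is only an approximation of such weights.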

Thank you for your useful comments. It is very kind of you.

Here is the new code:

An alternative to building your own in Java:

If you are planning on also doing other language processing operations other than building a co-occurrence matrix, it might be worth developing in Processing.py (Python mode) so that you can use NLTK.

For example:

Note in particular the discussions of sparse matrix approaches vs. dense matrix approaches.
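To illustrate the sparse side of that distinction in Java rather than NLTK: a `HashMap` keyed by word pair stores only the pairs that actually co-occur, instead of a dense n x n array that is mostly zeros when the vocabulary is large. This sketch counts every word in each sentence (not only a fixed list), and the sorted `"a|b"` key format is an arbitrary choice so each pair has a single entry; the names are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;
import java.util.TreeSet;

class SparseCooccurrence {
    // Maps "a|b" (a < b alphabetically) to the number of sentences containing both words.
    static Map<String, Integer> count(String[] sentences) {
        Map<String, Integer> counts = new HashMap<>();
        for (String sentence : sentences) {
            // distinct words of this sentence, sorted so each pair has one key
            TreeSet<String> distinct = new TreeSet<>(Arrays.asList(sentence.toLowerCase().split("\\W+")));
            distinct.remove(""); // split can produce an empty first token
            String[] w = distinct.toArray(new String[0]);
            for (int i = 0; i < w.length; i++) {
                for (int j = i + 1; j < w.length; j++) {
                    counts.merge(w[i] + "|" + w[j], 1, Integer::sum); // only non-zero pairs are stored
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = count(new String[] {"Law and nature", "nature and law", "power"});
        System.out.println(counts.get("law|nature")); // prints 2
        System.out.println(counts.size());            // prints 3
    }
}
```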

Great idea. Thank you jeremydouglass.

For the upper right of the matrix only you can start the inner loop at the diagonal (or just beyond it). Basically, if m > n then you've already counted n,m so don't bother with m,n
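A sketch of that suggestion in plain Java (hypothetical names, substring matching as before): the inner loop starts at `i + 1`, so each pair is counted once and then mirrored into the lower half. The `comparisons` counter is only there to show the saving: 190 pair checks for 20 words, instead of the 400 cells of the full 20 x 20 grid.

```java
class UpperTriangle {
    // Number of word pairs checked in the last call (for illustration only).
    static int comparisons = 0;

    static int[][] buildTable(String[] sentences, String[] words) {
        int n = words.length;
        int[][] table = new int[n][n];
        comparisons = 0;
        for (int i = 0; i < n; i++) {
            // start just past the diagonal: pairs with j < i were already counted
            for (int j = i + 1; j < n; j++) {
                comparisons++;
                int count = 0;
                for (String s : sentences) {
                    if (s.contains(words[i]) && s.contains(words[j])) count++;
                }
                table[i][j] = count;
                table[j][i] = count; // mirror into the lower half (omit to keep only the upper half)
            }
        }
        return table;
    }

    public static void main(String[] args) {
        String[] words = new String[20];
        for (int k = 0; k < words.length; k++) words[k] = "word" + k;
        buildTable(new String[] {"word1 word2"}, words);
        System.out.println(comparisons); // prints 190 (20 * 19 / 2)
    }
}
```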

Thanks, koogs. I didn't think about that. It's easy.

I'd test match() in isolation too, I'm not sure it doesn't work just the same as contains() in the way you are calling it.
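A quick way to run that isolation test, sketched in plain Java with hypothetical names: `String.contains()` matches substrings, while a regular expression with `\b` word boundaries only matches whole words. Processing's `match()` is built on Java regular expressions, so a pattern such as `"\\bmen\\b"` should behave the same way there (`match(phrase, "\\bmen\\b") != null`), but that is worth confirming on your own sentences.

```java
import java.util.regex.Pattern;

class WordBoundaryTest {
    // True only if word appears as a whole word (\b on both sides).
    static boolean containsWord(String sentence, String word) {
        return Pattern.compile("\\b" + Pattern.quote(word) + "\\b").matcher(sentence).find();
    }

    public static void main(String[] args) {
        String s = "the government may inflict punishment";
        System.out.println(s.contains("men"));      // prints true: "men" is inside "government"
        System.out.println(containsWord(s, "men")); // prints false: no whole word "men"
    }
}
```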

Thanks for the suggestion. I tested it with a short text, and it seems to work well. Words like "punishment" or "government" are not counted as "men".

The last message I wrote with the loops is all wrong, those two lines should be the i and j loops, not the a and i loops (typing on phone is tricky...)

Thanks everyone for your help. I think the final result is nice.

Beautiful work!

(Should law / laws be lemmatized into one entry, or are they conceptually distinct here?)

Thank you, jeremydouglass. Usually, in this text (the Second Treatise of John Locke) when the author says law, he is talking about the natural law. The "laws" are those promulgated by the government. So I thought it was interesting to keep both terms.

@Rayle -- Very interesting. Some of your most common sentence co-occurrences seem like they might be part of recurring phrases that would show up in bigram or trigram counts: law / nature ("natural law") state / nature ("natural state" "state of nature") etc.
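A minimal bigram counter in plain Java, assuming that lowercase ASCII letters are enough to tokenize the text (accented letters would split words); the names are hypothetical. Counting adjacent word pairs per sentence would show whether pairs such as "state of" or "of nature" recur as fixed phrases.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class BigramCount {
    // Counts adjacent word pairs ("state of", "of nature", ...) in one sentence.
    static Map<String, Integer> bigrams(String sentence) {
        List<String> tokens = new ArrayList<>();
        for (String t : sentence.toLowerCase().split("[^a-z]+")) {
            if (!t.isEmpty()) tokens.add(t); // skip the empty token a leading separator produces
        }
        Map<String, Integer> counts = new HashMap<>();
        for (int i = 0; i + 1 < tokens.size(); i++) {
            counts.merge(tokens.get(i) + " " + tokens.get(i + 1), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = bigrams("The state of nature is a state of liberty");
        System.out.println(counts.get("state of")); // prints 2
    }
}
```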

Interesting that 'law' and 'laws' are usually distinct but have very high sentence co-occurrence. If comparison and contrast is a key part of the treatise (which I do not know well) then perhaps you might try separating all sentences containing only 'law' or 'laws' and then analyzing those two groups separately (if there is enough text to do this). Or try topic modeling....

Very good idea @jeremydouglass. I have coded a way to obtain short sentences from the text with the key word in the middle ("law", "laws", etc.). I can see the context now. This is the code:
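For readers who want to try the same idea, here is a keyword-in-context (KWIC) sketch in plain Java. It is not the code from the thread: it strips punctuation, splits on whitespace, and returns `window` words on each side of every exact match of the keyword, ignoring sentence boundaries. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class KeywordInContext {
    // Returns "window" words either side of each exact occurrence of key.
    static List<String> kwic(String text, String key, int window) {
        String[] tokens = text.toLowerCase().replaceAll("[^a-z\\s]", " ").trim().split("\\s+");
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < tokens.length; i++) {
            if (!tokens[i].equals(key)) continue; // "laws" does not match "law"
            int from = Math.max(0, i - window);
            int to = Math.min(tokens.length, i + window + 1);
            lines.add(String.join(" ", Arrays.copyOfRange(tokens, from, to)));
        }
        return lines;
    }

    public static void main(String[] args) {
        String text = "By the law of nature every man is free. The law of the land binds him.";
        for (String line : kwic(text, "law", 2)) System.out.println(line);
        // prints "by the law of nature" and "free the law of the"
    }
}
```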

And a screenshot of the output:

Very, very nice! And thanks so much for sharing it with the forum.

I'm not sure if anyone has ever implemented a word dendrogram for Processing, but these kinds of visualizations are excellent for text exploration of the roles specific keywords play in relation to other words. To increase the power of seeing keyword relationships in a dendrogram or markov chain visualization of what comes before and after "law", you can strip function words and stopwords out of your sentences before feeding them in.

Did you see the PI visualization recently?

You might arrange your words in a circle, connect those that appear in the same sentence with a line, and make the line thicker the more sentences they share.
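The geometry of that suggestion, sketched in plain Java with hypothetical helper names and a made-up count table: word i of n sits at angle 2 * PI * i / n on a circle, and a line's thickness is mapped linearly from the shared-sentence count. In a Processing sketch these numbers would feed `line()` and `strokeWeight()`; the 1 to 9 pixel range is an arbitrary choice.

```java
class CircleLayout {
    // (x, y) of word i when n words are spaced evenly on a circle of radius r around (cx, cy).
    static double[] position(int i, int n, double cx, double cy, double r) {
        double angle = 2 * Math.PI * i / n;
        return new double[] {cx + r * Math.cos(angle), cy + r * Math.sin(angle)};
    }

    // Maps a shared-sentence count linearly onto [minW, maxW].
    static double weight(int count, int maxCount, double minW, double maxW) {
        return minW + (maxW - minW) * count / (double) maxCount;
    }

    public static void main(String[] args) {
        String[] words = {"law", "power", "nature", "man"};
        int[][] table = {{0, 3, 8, 2}, {3, 0, 1, 4}, {8, 1, 0, 5}, {2, 4, 5, 0}}; // made-up counts
        int max = 0;
        for (int[] row : table) for (int v : row) max = Math.max(max, v);
        for (int i = 0; i < words.length; i++) {
            for (int j = i + 1; j < words.length; j++) {
                if (table[i][j] == 0) continue; // no line for words that never share a sentence
                double[] a = position(i, words.length, 300, 300, 250);
                double[] b = position(j, words.length, 300, 300, 250);
                System.out.printf("%s-%s: (%.0f,%.0f) -> (%.0f,%.0f), weight %.1f%n",
                        words[i], words[j], a[0], a[1], b[0], b[1], weight(table[i][j], max, 1, 9));
            }
        }
    }
}
```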

Here is a link to the Pi visualization that @Chrisir mentioned.

This form of visualization is called a chord diagram:

With text chord diagrams tend to be more legible with smaller sets of words, often filtered by type (e.g. only nouns as they co-occur in sentences, or only verbs, et cetera).

The Pi visualization is very nice. Thanks for the reference. I will try to do the same thing with the words. I don't know if it will be equally clear with 20 or 50 words instead of 10 digits. The dendrogram is also a good idea, and perhaps clearer. In fact, I was trying to code an arc diagram in Processing, like this one made with Protovis:

http://mbostock.github.io/protovis/ex/arc.html

but it is not very convincing for me. Now I am trying to use the 2D array from the first code to make an ordered matrix that shows regularities, something like Jacques Bertin's physical matrices:

http://www.aviz.fr/diyMatrix/

I must confess that it is proving difficult to work out the code to order the columns and rows of the matrix. If I get it right I will share it with you.
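One simple starting point, sketched in plain Java: compute a permutation from the row totals (words with the most co-occurrences first) and apply the same permutation to both rows and columns, so the matrix stays symmetric. This is a basic heuristic, not Bertin's manual reordering or a full seriation algorithm; the names and the example matrix are hypothetical, and the word labels must be reordered with the same permutation.

```java
import java.util.Arrays;
import java.util.Comparator;

class MatrixReorder {
    // Indices sorted by descending row sum (ties keep their original order).
    static Integer[] orderByRowSum(int[][] m) {
        int n = m.length;
        int[] sums = new int[n];
        for (int i = 0; i < n; i++) for (int v : m[i]) sums[i] += v;
        Integer[] order = new Integer[n];
        for (int i = 0; i < n; i++) order[i] = i;
        Arrays.sort(order, Comparator.comparingInt((Integer k) -> -sums[k]));
        return order;
    }

    // Applies the same permutation to rows and columns.
    static int[][] permute(int[][] m, Integer[] order) {
        int n = m.length;
        int[][] out = new int[n][n];
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
                out[i][j] = m[order[i]][order[j]];
        return out;
    }

    public static void main(String[] args) {
        int[][] m = {{0, 1, 0}, {1, 0, 5}, {0, 5, 0}};
        Integer[] order = orderByRowSum(m);
        System.out.println(Arrays.toString(order)); // prints [1, 2, 0]
        for (int[] row : permute(m, order)) System.out.println(Arrays.toString(row));
    }
}
```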

Thanks again for your valuable ideas. You go beyond a simple help with the code. It is very kind of you.

@Rayle -- interesting idea about the arc diagram. When you say "it is not very convincing for me" I think I might agree -- I find that both arc diagrams and sometimes non-ribbon chord diagrams tend to give a general impression of "this is complicated!" rather than being easy to read and conveying details of the data structure. Being able to see the ribbon thickness is what makes them useful to me.

So, for example, adding ribbon thickness to a basic arc diagram

...like in the Bible visualization arc diagram by Philipp Steinweber and Andreas Koller.

Advanced chord diagram systems like Circos have lots of ideas about how you can add details to make complex systems more readable....

http://circos.ca/

...but at a basic level, having a limited number of nodes and varying the thickness of the ribbons seems like the most important thing for indicating relationships in a way that viewers can easily understand.

Hi everyone,

Another form of graph that can be used to render the result is the force-directed graph.

It is used to show the co-occurrence of things. It can be messy, but it can also look pretty cool!

@jb4x -- Interesting idea. Force-directed graphs don't always work well for actually understanding sentence word data (as you say, messy) but they can be done in Processing -- an example:

With the help of jb4x I made these two images, inspired by the PI visualization. They are very nice. Nevertheless, as jeremydouglass said, they seem too complicated. Maybe changing the thickness of the ribbons could be the solution, or using different colors. These graphs offer different information from the matrix: the connections are between two consecutive words. They are more related to the evolution of the texts than to their inner meaning. Anyway, they are nice and give us some clues to discover the similarities and differences between authors.

They are beautiful, @Rayle!

A quick improvement to make comparative reading easier (if that is the goal) would be to change the radial sorting order to alphabetical, rather than sorting by descending frequency.

Then the word sequence would be sorted in the same order, and e.g. "power" would have the same color in both images (purple), regardless of arc size, making visual comparisons between the two arc sizes much easier (more power in Locke). This would also make it easier for viewers to visually look up relationships, e.g. "god <-> man", without as much visual hunting.
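A sketch of the fixed-ordering idea in plain Java, with hypothetical names and made-up word lists: build one alphabetically sorted vocabulary from all the lists being compared, and derive each word's radial position and hue from its index in that shared list, so "power" lands in the same place with the same color in every image regardless of its frequency. Using the hue with Processing's `colorMode(HSB, 1)` is an assumption, not code from the thread.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

class FixedOrder {
    // One alphabetical vocabulary shared by every image being compared.
    static List<String> sharedOrder(String[]... wordLists) {
        TreeSet<String> all = new TreeSet<>();
        for (String[] list : wordLists) all.addAll(Arrays.asList(list));
        return new ArrayList<>(all);
    }

    // Hue in [0, 1) from the word's alphabetical position, not its frequency
    // (a word missing from the shared list would give a negative value).
    static float hueFor(String word, List<String> order) {
        return order.indexOf(word) / (float) order.size();
    }

    public static void main(String[] args) {
        String[] locke = {"power", "law", "god"}; // made-up, frequency-ordered lists
        String[] hobbes = {"man", "law"};
        List<String> order = sharedOrder(locke, hobbes);
        System.out.println(order); // prints [god, law, man, power]
        for (String w : order) System.out.println(w + " -> hue " + hueFor(w, order));
    }
}
```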

I might also suggest printing the radial keywords in white with a color-coordinated underline or a colored dot next to them. You really have to squint to see e.g. "law" in Hobbes.

Changing the colors was easy. I'm afraid that sorting the words alphabetically takes a little more time.

Very nice! Love the color palette.

Using a fixed ordering (such as alphabetical) rather than ordering by descending frequency is mainly important for comparison between two images of different data -- looking up terms is a secondary benefit.

If you sort your beginning anchor points by ending destination along each arc then the collections of lines will more strongly resemble ribbons, even if you don't change anything else about how the lines are rendered. Everything will appear less wispy and more banded.

The renders look really nice, very well done!

As you wrote, there are 2 limitations with that way of rendering. The first one is that it is hard to read, and the second is that, as we discussed, some data are hidden: because you follow the progression of the text, you miss some co-occurrence links when there are more than 2 words in a sentence.

For the first point I think that the solution of changing the thickness of the ribbon, as you wrote, would be the way to go.

For the second point, I don't really have a concrete idea yet, but maybe you could first treat the sentences that have 2 words in them, then the ones that have 3 words, then the ones that have 4, etc. This way you can have full control over the render. Another thought is to play with ribbon transparency. Let's say you have a thick ribbon connecting "law" to "nature". Maybe 40% of those "law" occurrences are also connected to "laws", so you have another ribbon, 40% as thick as the first one, overlaid to show that link.

Very inspiring ideas. I will try some of them: a new sorting method and ribbons with different thicknesses. Don't forget that the original code to make the graphs is from jb4x. Thanks to his suggestions, I only made slight modifications to his original idea.

Since the goal is to show the similarities between authors that are usually considered very different (something like the reference jeremydouglass gave us: similardiversity.net/), I think it is possible to give up some information without problem.

Thanks to both of you.

Re: original code by @jb4x -- ah, yes, of course, the PI visualization code.

Yes, for that original PI visualization sorting wouldn't make any sense -- if I understand that one right, the whole point is to see the way that random distributions of digit correspondences form a texture, like a diaphanous surface.

However, in your case, you want to see arc-to-arc relationships, like in Circos, so sorting makes a lot more sense. The order in which digits occur in PI is the randomness itself, which is the subject of that viz -- but the order in which individual bigrams occur in your source text doesn't have anything to do with the co-occurrence ratios you are interested in, and it partially hides those ratios.

I didn't implement arc sorting for the original PI viz, and I don't know what your updated code looks like, but here is a simple example of a data structure that creates a sorted correspondence list for each arc in the PI code -- there are 10 IntLists, 0-9, and each one contains bands of arc destinations, sorted 0-9.
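The example code itself is not reproduced in this thread; what follows is an independent plain-Java sketch of the same data structure, with `List<Integer>` standing in for Processing's `IntList` and hypothetical names. Each consecutive pair (a, b) in the sequence adds destination b to the list for arc a, and each list is then sorted so the anchor points along an arc are grouped by destination.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class SortedDestinations {
    // dest.get(a) lists every b that follows a in the sequence, sorted ascending.
    static List<List<Integer>> build(int[] sequence, int groups) {
        List<List<Integer>> dest = new ArrayList<>();
        for (int g = 0; g < groups; g++) dest.add(new ArrayList<>());
        for (int k = 0; k + 1 < sequence.length; k++) {
            dest.get(sequence[k]).add(sequence[k + 1]);
        }
        for (List<Integer> d : dest) Collections.sort(d);
        return dest;
    }

    public static void main(String[] args) {
        int[] digits = {3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5}; // first digits of PI
        List<List<Integer>> dest = build(digits, 10);
        for (int a = 0; a < dest.size(); a++) System.out.println(a + " -> " + dest.get(a));
    }
}
```

When drawing, the k-th anchor point on arc a would connect to arc `dest.get(a).get(k)`, so neighbouring anchors that share a destination form a band.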

The only change I made in the PI viz code was to transform the words into a String of numbers (like PI), each number corresponding to a word. I just needed to use `loadTable()` with the correspondences to return the word afterwards.

I will try with your code. Thanks.