Data crawler for dynamic variable on webpage (regex syntax)

Hey all,

So I'm trying to make a data crawler that looks for a specific (dynamic) variable on a webpage, so that the value can be manipulated through conditionals, etc. in Processing.

I've gotten to the point where I download the page as an .htm file and then load its lines in Processing like this:

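```processing
// Load every line of the saved page into an array of Strings
String lines[] = loadStrings("heat.htm");
println("there are " + lines.length + " lines");
// Print each line so the HTML can be inspected in the console
for (int i = 0; i < lines.length; i++) {
  println(lines[i]);
}
```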

I'm having trouble knowing where to go from here to access that specific variable. Here is the main source page I'm trying to scrape: nba.com/gameline/heat/

I've found Daniel Shiffman's tutorial on Processing's built-in functions for parsing with regex:

shiffman.net/2011/12/22/night-3-regular-expressions-in-processing/

but it's a bit daunting for me, and I'm not sure how to go about tackling this.

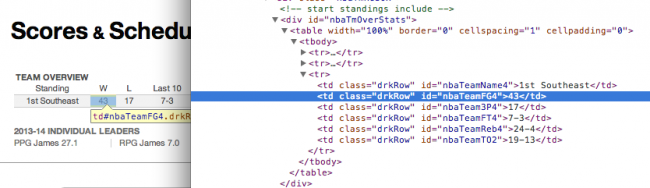

The attached screenshot shows the variable I'm trying to access (the number of wins for the Miami Heat so far) and where it is nested within the page's HTML.

Answers

You should start by creating a custom class whose fields represent the 6 columns of a row.

Then you gotta find out the table's pattern within that ".htm" file.

Once you find a line with `<tr>` in it, you know the next 6 lines hold the values you need to assign to the fields of your class. Each value sits between `">` and `</td>`. You just have to devise a parser algorithm to extract it.

Great! I'll report back with my attempt.
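The row-based approach described above can be sketched in plain Java (a Processing sketch can use the same String methods). This is only an illustration under assumptions: each `<td>` is taken to sit on its own line in the form `<td class="...">value</td>`, the class name `TeamRow` is hypothetical, and the six columns are kept in an array because the thread never names them.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical class: one object per table row, one slot per column.
// The six columns stay in an array because their names are not known.
class TeamRow {
    String[] columns = new String[6];

    // Returns the trimmed text between the first "> and the next </td>, or null.
    static String cellValue(String line) {
        int start = line.indexOf("\">");
        if (start < 0) return null;
        start += 2; // skip the quote and the '>'
        int end = line.indexOf("</td>", start);
        if (end < 0) return null;
        return line.substring(start, end).trim();
    }

    // Each time a line contains "<tr", reads the next 6 lines as that row's cells.
    static List<TeamRow> parse(String[] lines) {
        List<TeamRow> rows = new ArrayList<>();
        for (int i = 0; i < lines.length; i++) {
            if (!lines[i].contains("<tr")) continue;
            if (i + 6 >= lines.length) break; // not enough lines left for a full row
            TeamRow row = new TeamRow();
            for (int c = 0; c < 6; c++) {
                row.columns[c] = cellValue(lines[i + 1 + c]);
            }
            rows.add(row);
            i += 6; // skip the cells just consumed
        }
        return rows;
    }

    public static void main(String[] args) {
        // Made-up sample in the assumed one-cell-per-line layout.
        String[] sample = {
            "<tr>",
            "<td class=\"c1\">v1</td>",
            "<td class=\"c2\">43</td>",
            "<td class=\"c3\">v3</td>",
            "<td class=\"c4\">v4</td>",
            "<td class=\"c5\">v5</td>",
            "<td class=\"c6\">v6</td>",
            "</tr>"
        };
        for (TeamRow row : parse(sample)) {
            System.out.println(String.join(" | ", row.columns));
        }
    }
}
```

If the real page puts several cells on one line, the "next 6 lines" assumption breaks and the line-based loop would need to split on `</td>` instead.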

I've been at this without really making any progress. I'm struggling to understand exactly what you mean in your suggestion.

You gotta use String's methods:

http://download.java.net/jdk8/docs/api/java/lang/String.html

For example, contains() and indexOf(), in order to identify the correct lines and extract the data!
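A minimal sketch of the contains()/indexOf() idea in plain Java. The helper names `between` and `findValue` are hypothetical, and the marker `nbaTeamFG4` is borrowed from the regex suggested later in this thread; the sketch assumes the value follows `nbaTeamFG4">` on the same line and ends at the next `<`.

```java
class FieldExtractor {
    // Returns the text between the first occurrence of `before` and the next
    // occurrence of `after`, or null if either marker is missing.
    static String between(String line, String before, String after) {
        int start = line.indexOf(before);
        if (start < 0) return null;
        start += before.length();
        int end = line.indexOf(after, start);
        if (end < 0) return null;
        return line.substring(start, end);
    }

    // Uses contains() to find the first line mentioning the marker, then
    // indexOf()/substring() (via between) to cut out the value after it.
    static String findValue(String[] lines, String marker) {
        for (String line : lines) {
            if (line.contains(marker)) {
                return between(line, marker + "\">", "<");
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Hypothetical lines standing in for the result of loadStrings("heat.htm").
        String[] lines = {
            "<tr>",
            "<td class=\"nbaTeamFG4\">43</td>",
            "</tr>"
        };
        System.out.println(findValue(lines, "nbaTeamFG4")); // prints 43
    }
}
```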

RegEx pattern:

`(?<=nbaTmOverStats.*?nbaTeamFG4\">)\d+`

I don't know how to implement this in Processing, but the pattern should work. Your match should be exactly "43".
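One likely source of trouble with this pattern in Java: `java.util.regex` generally rejects a lookbehind that has no obvious maximum length, and the `.*?` inside `(?<=...)` is unbounded, so compiling it can throw a PatternSyntaxException. A common workaround is to drop the lookbehind and put the digits in a capture group. The sketch below assumes markup resembling the hypothetical sample in `main`; `findWins` is a hypothetical helper, and the lines are joined so that `.*?` (with DOTALL) can span line breaks.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class WinsRegex {
    // Same idea as the lookbehind pattern, but the digits are captured in group 1.
    // DOTALL lets ".*?" cross the line breaks introduced by joining the lines.
    static final Pattern WINS = Pattern.compile(
        "nbaTmOverStats.*?nbaTeamFG4\">(\\d+)", Pattern.DOTALL);

    // Returns the captured digits, or null if the pattern is not found.
    static String findWins(String[] lines) {
        Matcher m = WINS.matcher(String.join("\n", lines));
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        // Hypothetical markup; the real page's structure may differ.
        String[] lines = {
            "<table class=\"nbaTmOverStats\">",
            "<tr><td class=\"nbaTeamFG4\">43</td></tr>",
            "</table>"
        };
        System.out.println(findWins(lines)); // prints 43
    }
}
```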


If someone happens to know RegEx, they can use the match() or matchAll() functions:

http://processing.org/reference/match_.html

http://processing.org/reference/matchAll_.html
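Processing's matchAll() returns a two-dimensional String array (one row per match; index 0 of each row is the whole match and the following indices are the groups), or null when nothing matches. The plain-Java stand-in below only shows roughly what that involves with java.util.regex; it is not Processing's actual implementation, and the sample markup is made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class MatchAllSketch {
    // Rough stand-in for Processing's matchAll(): one String[] per match,
    // where [0] is the whole match and [1..n] are the capture groups; null if nothing matched.
    static String[][] matchAll(String text, String regex) {
        Matcher m = Pattern.compile(regex).matcher(text);
        List<String[]> results = new ArrayList<>();
        while (m.find()) {
            String[] groups = new String[m.groupCount() + 1];
            for (int g = 0; g <= m.groupCount(); g++) {
                groups[g] = m.group(g);
            }
            results.add(groups);
        }
        return results.isEmpty() ? null : results.toArray(new String[0][]);
    }

    public static void main(String[] args) {
        // Made-up markup with two cells; each match's [1] holds the digits.
        String html = "<td class=\"a\">43</td><td class=\"b\">7</td>";
        String[][] all = matchAll(html, ">(\\d+)</td>");
        if (all != null) {
            for (String[] match : all) {
                System.out.println(match[1]);
            }
        }
    }
}
```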

I attempted to take your suggestion (along with finding an example), but I'm still having a bit of a difficult time. Here is my attempt:

Well... maybe some progress. My sketch compiles, but I get a PatternSyntaxException that I know is due to the regex format for Java.

I'm a bit unsure how to correct this, despite reading up on regex in Java as it relates to HTML. Code below:

Maybe it's because there's `"\\d+"`; it should be `"\d+"` (one backslash). In your case, `\\` means search for a `\` character that's followed by some digits (the `+` means any length, but at least one digit). This pattern would only work if the HTML code contains `\43`.

So, remove one backslash and maybe it works.


It won't: Java uses `\` to escape certain characters in string literals (`\n`, for instance), so if you want a plain `\` you have to escape it, hence `\\`.

(This forum also appears to use `\` to escape things: one on its own appears OK, but two appear as a single one. To get those two above, I had to type four.)
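A small plain-Java check of the escaping point (Processing follows the same Java string rules). Note that `"\d+"` with a single backslash would not even compile in Java, since `\d` is an illegal escape sequence in a string literal. The constant names are hypothetical.

```java
import java.util.regex.Pattern;

class EscapeDemo {
    // The Java literal "\\d+" is the 3-character string \d+ that the regex engine sees.
    static final String DIGITS = "\\d+";
    // A regex for one literal backslash is \\, which takes four backslashes in Java source,
    // followed here by \d+ (two more), giving six in total.
    static final String BACKSLASH_THEN_DIGITS = "\\\\\\d+";

    public static void main(String[] args) {
        System.out.println(DIGITS);                                         // prints \d+
        System.out.println(DIGITS.length());                                // prints 3
        System.out.println(Pattern.matches(DIGITS, "43"));                  // prints true
        System.out.println(Pattern.matches(BACKSLASH_THEN_DIGITS, "43"));   // prints false
        System.out.println(Pattern.matches(BACKSLASH_THEN_DIGITS, "\\43")); // prints true
    }
}
```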

m[0] is the whole matched pattern

m[1] is the field you want, the digits between the > and <
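Processing's match() works the same way for the first match only, which is where m[0] and m[1] come from. A rough plain-Java stand-in, not Processing's own code, with a hypothetical line of markup:

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class MatchSketch {
    // Rough stand-in for Processing's match(): first match only.
    // [0] is the whole matched text, [1..] are the capture groups; null if no match.
    static String[] match(String text, String regex) {
        Matcher m = Pattern.compile(regex).matcher(text);
        if (!m.find()) return null;
        String[] groups = new String[m.groupCount() + 1];
        for (int g = 0; g <= m.groupCount(); g++) {
            groups[g] = m.group(g);
        }
        return groups;
    }

    public static void main(String[] args) {
        // Hypothetical line; the pattern captures the digits between > and <.
        String line = "<td class=\"nbaTeamFG4\">43</td>";
        String[] m = match(line, "nbaTeamFG4\">(\\d+)<");
        if (m != null) {
            System.out.println(m[0]); // prints nbaTeamFG4">43<
            System.out.println(m[1]); // prints 43
        }
    }
}
```

Checking for null before indexing matters here: when the page changes and nothing matches, m[1] would otherwise throw.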

This has become a running joke on Stack Overflow... Basically, when somebody asks "How can I parse HTML with regular expressions?", the answer is invariably: "Just don't do it this way!".

Regexes can be OK for simple cases, on a page you are sure won't vary. But they tend to fail as soon as a webmaster changes the markup even slightly: switching from " to ' for attribute values (or dropping the quotes entirely!), and so on.
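To make the point concrete: a pattern written for double-quoted attributes stops matching as soon as the quotes change. The sketch uses hypothetical markup; the `LOOSER` pattern patches that one case but would still break on, say, spaces around `=` or an extra attribute, which is the argument for a real parser.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class FragileRegex {
    // Written for double-quoted attribute values only.
    static final Pattern STRICT = Pattern.compile("class=\"nbaTeamFG4\">(\\d+)");
    // Tolerates ", ' or no quotes around the value (still not a real HTML parser).
    static final Pattern LOOSER = Pattern.compile("class=[\"']?nbaTeamFG4[\"']?>(\\d+)");

    // Returns group 1 of the first match, or null.
    static String firstGroup(Pattern p, String html) {
        Matcher m = p.matcher(html);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        String[] variants = {
            "<td class=\"nbaTeamFG4\">43</td>",
            "<td class='nbaTeamFG4'>43</td>",
            "<td class=nbaTeamFG4>43</td>"
        };
        for (String v : variants) {
            // STRICT only succeeds on the first variant; LOOSER on all three.
            System.out.println(firstGroup(STRICT, v) + " / " + firstGroup(LOOSER, v));
        }
    }
}
```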

For parsing HTML, you should use a specialized library that can handle all the quirks HTML can have (from permissive standards to coding errors tolerated by browsers!).
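jSoup is a third-party library, so to keep this sketch dependency-free it swaps in a different tool: the JDK's built-in (and rather old, HTML 3.2-era) Swing parser, `javax.swing.text.html.parser.ParserDelegator`. It only illustrates the "parse the document, then look up the element" approach as opposed to pattern matching on raw text; the helper `textOfClass` is hypothetical, and for real pages a maintained parser such as jSoup is the better choice.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import javax.swing.text.MutableAttributeSet;
import javax.swing.text.html.HTML;
import javax.swing.text.html.HTMLEditorKit;
import javax.swing.text.html.parser.ParserDelegator;

class CellByClass {
    // Returns the text of the first element whose class attribute equals cssClass, or null.
    static String textOfClass(String html, String cssClass) {
        StringBuilder found = new StringBuilder();
        HTMLEditorKit.ParserCallback callback = new HTMLEditorKit.ParserCallback() {
            private boolean inside = false; // currently inside the target element
            private boolean done = false;   // target already captured once

            @Override
            public void handleStartTag(HTML.Tag tag, MutableAttributeSet attrs, int pos) {
                Object cls = attrs.getAttribute(HTML.Attribute.CLASS);
                if (!done && cls != null && cssClass.equals(cls.toString())) {
                    inside = true;
                }
            }

            @Override
            public void handleText(char[] data, int pos) {
                if (inside) found.append(data);
            }

            @Override
            public void handleEndTag(HTML.Tag tag, int pos) {
                // Stops at the first closing tag, so this only suits simple cells like <td>43</td>.
                if (inside) {
                    inside = false;
                    done = true;
                }
            }
        };
        try {
            new ParserDelegator().parse(new StringReader(html), callback, true);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return found.length() == 0 ? null : found.toString().trim();
    }

    public static void main(String[] args) {
        // Hypothetical markup using single quotes instead of double quotes.
        String html = "<table><tr><td class='nbaTeamFG4'>43</td></tr></table>";
        System.out.println(textOfClass(html, "nbaTeamFG4"));
    }
}
```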

jSoup is often mentioned (for good reason) in the Processing forums, old and new.

I agree about the unpredictability of websites... but what isn't unpredictable? Product APIs change constantly too, man ;)

I'll have a look at jSoup. Thanks!