Extracting color from a webcam image and translating it to sound

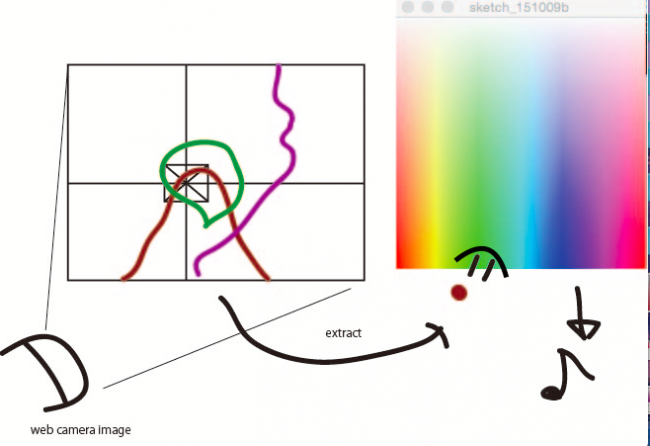

I want to extract color information from a webcam image and translate it into sound.

I have prepared the sound part (see the attached code), but I have no idea how to connect it to the webcam.

The attached image shows what I have in mind.

Can anybody help me?

If you need more information, please let me know.

Answers

Color Music Code Example

    import ddf.minim.analysis.*;
    import ddf.minim.*;
    import ddf.minim.signals.*;

    Minim minim;
    AudioOutput out;

    // Frequency (Hz) of each note
    final int note[] = {262, 294, 330, 349, 392, 440, 494, 523};

    void setup() {
      size(255, 255);
      minim = new Minim(this);
      // get a line out from Minim, default bufferSize is 1024, default sample rate is 44100, bit depth is 16
      out = minim.getLineOut(Minim.STEREO);

      // Draw a color chart as a stand-in image for now.
      colorMode(HSB, 255);
      for (int i = 0; i < 255; i++) {
        for (int j = 0; j < 255; j++) {
          stroke(i, j, 255);
          point(i, j);
        }
      }

      // Switch to HSB, which is easier to treat as "color" than RGB,
      // and set its resolution to the number of notes
      colorMode(HSB, note.length);
    }

    void draw() {
    }

    // Given a color, this method plays the corresponding note
    void playColor(color h) {
      MyNote newNote;
      newNote = new MyNote(note[(int)hue(h)], 0.2);
    }

    // Pick up the color at the clicked point and call playColor
    void mousePressed() {
      color c = get(mouseX, mouseY);
      playColor(c);
    }

    void stop() {
      out.close();
      minim.stop();
      super.stop();
    }

    class MyNote implements AudioSignal {
      private float freq;
      private float level;
      private float alph;
      private SineWave sine;

      MyNote(float pitch, float amplitude) {
        freq = pitch;
        level = amplitude;
        sine = new SineWave(freq, level, out.sampleRate());
        alph = 0.9; // Decay constant for the envelope
        out.addSignal(this);
      }

      void updateLevel() {
        // Called once per buffer to decay the amplitude away
        level = level * alph;
        sine.setAmp(level);

        // This also handles stopping this oscillator when its level is very low.
        if (level < 0.01) {
          out.removeSignal(this);
        }
        // this will lead to destruction of the object, since the only active
        // reference to it is from the LineOut
      }

      void generate(float[] samp) {
        // generate the next buffer's worth of sinusoid
        sine.generate(samp);
        // decay the amplitude a little bit more
        updateLevel();
      }

      // AudioSignal requires both mono and stereo generate functions
      void generate(float[] sampL, float[] sampR) {
        sine.generate(sampL, sampR);
        updateLevel();
      }
    }
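The color-to-note lookup inside playColor() can be tried on its own, without Minim or a Processing window. The following is only a minimal plain-Java sketch of that idea: the class name `HueToNote` and its methods are hypothetical, the hue is computed by hand instead of with Processing's hue(), and colors are assumed to be packed as 0xAARRGGBB the way Processing's color type is.

```java
// Plain-Java sketch (not a Processing sketch) of the hue -> note lookup.
// HueToNote, hue(), noteIndex() and frequency() are illustrative names.
class HueToNote {
    // Same frequencies (Hz) as the note[] array in the post.
    static final int[] NOTE = {262, 294, 330, 349, 392, 440, 494, 523};

    // Hue of a packed 0xAARRGGBB color, in the range 0.0 (inclusive) to 1.0 (exclusive).
    static float hue(int argb) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        int max = Math.max(r, Math.max(g, b));
        int min = Math.min(r, Math.min(g, b));
        if (max == min) {
            return 0f; // gray has no hue; treat it as 0 here
        }
        float d = max - min;
        float h;
        if (max == r) {
            h = (g - b) / d;
        } else if (max == g) {
            h = 2f + (b - r) / d;
        } else {
            h = 4f + (r - g) / d;
        }
        h /= 6f;
        return h < 0f ? h + 1f : h;
    }

    // Split the hue circle into NOTE.length equal slices and pick one.
    static int noteIndex(int argb) {
        int idx = (int) (hue(argb) * NOTE.length);
        return Math.min(idx, NOTE.length - 1); // defensive clamp
    }

    static int frequency(int argb) {
        return NOTE[noteIndex(argb)];
    }

    public static void main(String[] args) {
        System.out.println("red   -> " + frequency(0xFFFF0000) + " Hz");
        System.out.println("green -> " + frequency(0xFF00FF00) + " Hz");
        System.out.println("blue  -> " + frequency(0xFF0000FF) + " Hz");
    }
}
```

Because the lookup depends only on hue, brightness and saturation do not change the note; a dark red and a bright red both map to the first entry.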

Color Tracking Code Example

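    import processing.video.*;

    Capture cap;
    color target = color(HSB, 255); // color to track

    void setup() {
      size(640, 480);                    // window size
      smooth();                          // smooth drawing
      String[] cams = Capture.list();    // get the names of all connected cameras
      cap = new Capture(this, cams[0]);  // capture from the first camera
      cap.start();
    }

    void draw() {
      if (cap.available()) {
        // variable declarations
        int x = width/2;     // x coordinate of the tracked target
        int y = height/2;    // y coordinate of the tracked target
        int dmax = 21;       // threshold for deciding whether a pixel belongs to the target
        int xmin = width;    // minimum x coordinate of the tracked pixels
        int ymin = height;   // minimum y coordinate of the tracked pixels
        int xmax = 0;        // maximum x coordinate of the tracked pixels
        int ymax = 0;        // maximum y coordinate of the tracked pixels
        cap.read();          // read the camera frame
        image(cap, 0, 0);    // display the camera frame
        // color detection
        for (int i = 0; i < width*height; i++) {
          // compute the difference from the target color
          float dr = abs( red(target) - red(cap.pixels[i]) );
          float dg = abs( green(target) - green(cap.pixels[i]) );
          float db = abs( blue(target) - blue(cap.pixels[i]) );
          // ... (the rest of the detection step is not included in the post)
        }
      }
    }

    // Make the color of the clicked pixel the tracking target
    void mousePressed() {
      target = cap.pixels[mouseX+mouseY*width];
    }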
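The tracking example stops right after computing dr, dg, and db, so what follows is only one possible way to finish the idea, not the original author's code. This plain-Java sketch assumes that a pixel counts as a match when all three channel differences are below dmax, and it returns the centre of the bounding box of the matches plus their average color. The class `ColorTracker`, the `Match` type, and the `track` method are hypothetical names; the `pixels` array stands in for cap.pixels.

```java
import java.util.Arrays;

// Plain-Java sketch of a per-channel threshold match over a frame of
// packed 0xAARRGGBB pixels. All names here are illustrative.
class ColorTracker {
    // Result of one pass over a frame.
    static final class Match {
        final int count; // number of matching pixels
        final int x;     // bounding-box centre, x
        final int y;     // bounding-box centre, y
        final int argb;  // average color of the matching pixels

        Match(int count, int x, int y, int argb) {
            this.count = count;
            this.x = x;
            this.y = y;
            this.argb = argb;
        }
    }

    static Match track(int[] pixels, int w, int h, int target, int dmax) {
        int tr = (target >> 16) & 0xFF;
        int tg = (target >> 8) & 0xFF;
        int tb = target & 0xFF;
        int xmin = w, ymin = h, xmax = 0, ymax = 0;
        long sumR = 0, sumG = 0, sumB = 0;
        int count = 0;
        for (int i = 0; i < w * h; i++) {
            int r = (pixels[i] >> 16) & 0xFF;
            int g = (pixels[i] >> 8) & 0xFF;
            int b = pixels[i] & 0xFF;
            // Assumed rule: every channel must be within dmax of the target.
            if (Math.abs(tr - r) < dmax && Math.abs(tg - g) < dmax && Math.abs(tb - b) < dmax) {
                int px = i % w;
                int py = i / w;
                xmin = Math.min(xmin, px);
                xmax = Math.max(xmax, px);
                ymin = Math.min(ymin, py);
                ymax = Math.max(ymax, py);
                sumR += r;
                sumG += g;
                sumB += b;
                count++;
            }
        }
        if (count == 0) {
            // Nothing matched: fall back to the frame centre and the target color.
            return new Match(0, w / 2, h / 2, target);
        }
        int avg = 0xFF000000
                | ((int) (sumR / count) << 16)
                | ((int) (sumG / count) << 8)
                | (int) (sumB / count);
        return new Match(count, (xmin + xmax) / 2, (ymin + ymax) / 2, avg);
    }

    public static void main(String[] args) {
        // 4x3 test frame: black except two reddish pixels at (1,1) and (3,1).
        int w = 4, h = 3;
        int[] frame = new int[w * h];
        Arrays.fill(frame, 0xFF000000);
        frame[1 + 1 * w] = 0xFFFA0000;
        frame[3 + 1 * w] = 0xFFF00A00;
        Match m = track(frame, w, h, 0xFFFF0000, 21);
        System.out.println(m.count + " matches, centre (" + m.x + ", " + m.y + ")");
    }
}
```

In a combined Processing sketch, one way to connect the two examples would be to pass the matched color to playColor() from the Color Music example whenever the match count is above zero, ideally only when the note changes so that a new note is not started on every frame.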

You'll get a better response if you format your code. Here's how:

http://forum.processing.org/two/discussion/8045/how-to-format-code-and-text

Thank you, I will do that! Sorry for bothering you!