
Get Usermap for each user

We were able to figure it out, thank you for the help! The userMap can be indexed to tell us the user ID at each pixel, and from there we can just manipulate the pixels:

```java
rgbImage = kinect.rgbImage();
userMap = kinect.userMap();
rgbImage.loadPixels(); // populate pixels[] before editing it
for (int i = 0; i < userMap.length; i++) {
  if (userMap[i] == 1) {
    // user 1: random grey noise
    rgbImage.pixels[i] = color(random(255));
  } else if (userMap[i] == 2) {
    // user 2: solid red
    rgbImage.pixels[i] = color(255, 0, 0);
  }
}
rgbImage.updatePixels();
image(rgbImage, 0, 0);
```

Get Usermap for each user

Not an expert in Kinect, but I have a few questions:

Do you detect when a new user enters or leaves the scene? Do you get your messages from `onNewUser()`/`onLostUser()`?

What documentation are you using for the Kinect? The question is how to relate `kinect.userMap()` to each user as stored in `kinect.getUsers()`. I could suggest a solution where you implement your own user ID assignment directly on your userMap pixel data (i.e. detect your own users), but there is no need to reinvent the wheel if the library already does it for you.

Also, what Kinect device are you using? It would help if you provided the specs.

Kf
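To make the "assign your own user IDs" idea concrete, here is a minimal plain-Java sketch that stores one glitch per user ID in a map, instead of in a list indexed by position. `GlitchRegistry` and its methods are hypothetical names, not part of SimpleOpenNI; the assumption is that `add()` would be called from `onNewUser()` and `remove()` from `onLostUser()`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

// Hypothetical helper: keeps one glitch ID per tracked user ID.
class GlitchRegistry {
    private final Map<Integer, Integer> glitchByUser = new HashMap<>();
    private final Random rng;
    private final int glitchCount;

    GlitchRegistry(int glitchCount, long seed) {
        this.glitchCount = glitchCount;
        this.rng = new Random(seed);
    }

    // Picks a glitch in 1..glitchCount. nextInt(n) excludes n,
    // so adding 1 gives an inclusive upper bound.
    int add(int userId) {
        int glitch = 1 + rng.nextInt(glitchCount);
        glitchByUser.put(userId, glitch);
        return glitch;
    }

    // Forget a user that has left the scene.
    void remove(int userId) {
        glitchByUser.remove(userId);
    }

    // Glitch for this user, or 0 if the user is not tracked.
    int glitchFor(int userId) {
        return glitchByUser.getOrDefault(userId, 0);
    }

    int size() {
        return glitchByUser.size();
    }
}
```

Keying by user ID means that when one user leaves, the others keep their glitches; looking glitches up by list position depends on the order in which users happen to be listed.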

Get Usermap for each user

Hi everyone,

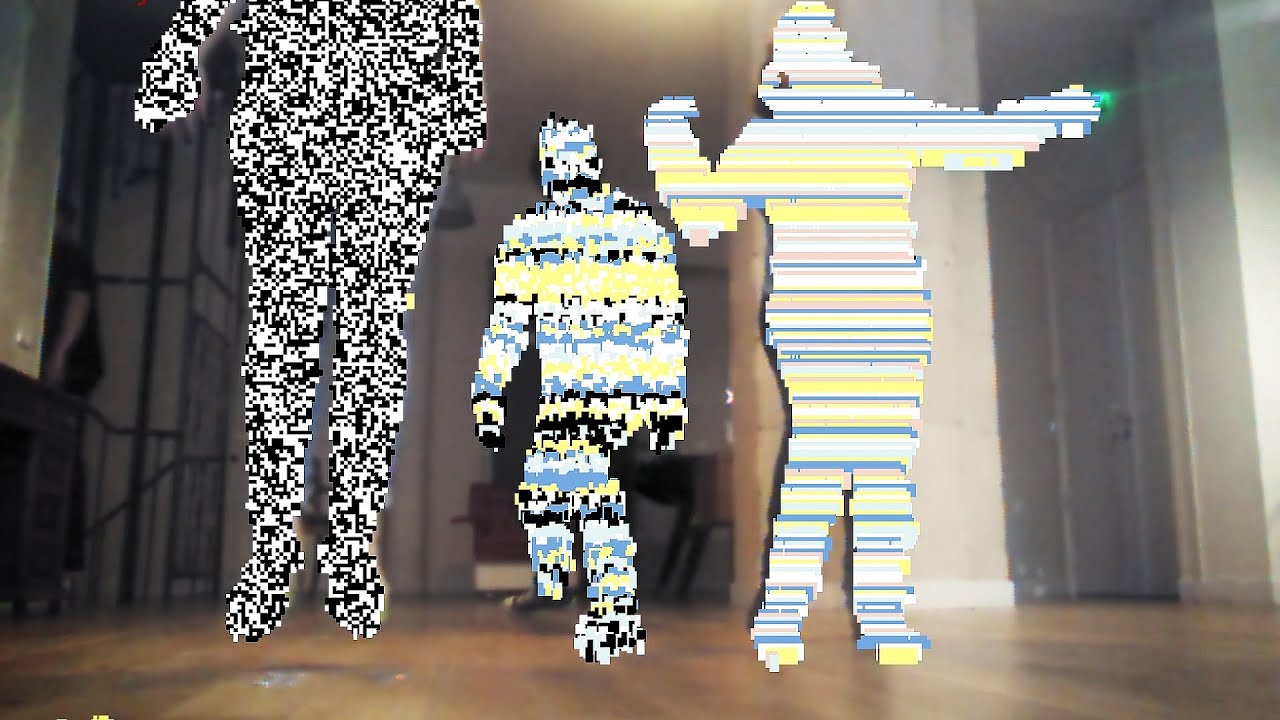

I'm trying to make a Processing program where each person (silhouette) is assigned a different glitch. Essentially, something like this.

Right now we can only assign the same glitch to every person.

This is our code:

```java
import processing.opengl.*;
import SimpleOpenNI.*;

SimpleOpenNI kinect;
int userID;
int[] userMap;
int randomNum;
float red;
float green;
float blue;
PImage rgbImage;
int count;
ArrayList<User> users = new ArrayList<User>();

class User {
  int userid;
  int glitchid;

  User(SimpleOpenNI curContext, int id) {
    // random(1, 3) returns a float in [1, 3), so the cast gives 1 or 2.
    // random(1, 2) always truncated to 1, so every user got glitch 1.
    glitchid = (int)random(1, 3);
    userid = id;
  }

  User() {
  }
}

void setup() {
  size(640, 480, P3D);
  kinect = new SimpleOpenNI(this);
  kinect.setMirror(true);
  kinect.enableDepth();
  kinect.enableUser();
  kinect.enableRGB();
}

void draw() {
  kinect.update();
  rgbImage = kinect.rgbImage();
  image(rgbImage, 0, 0);
  int[] userList = kinect.getUsers();
  for (int i = 0; i < userList.length; i++) {
    int glitch = users.get(i).glitchid;
    if (glitch == 1) {
      crt();
    } else {
      crtcolor();
    }
  }
  stroke(100);
  smooth();
}

void onNewUser(SimpleOpenNI curContext, int userId) {
  println("onNewUser - userId: " + userId);
  User u = new User(curContext, userId);
  users.add(u);
  println("glitchid " + u.glitchid);
  curContext.startTrackingSkeleton(userId);
}

void onLostUser(SimpleOpenNI curContext, int userId) {
  println("onLostUser - userId: " + userId);
}

void crtcolor() {
  color randomColor = color(random(255), random(255), random(255), 255);
  boolean flag = false;
  if (kinect.getNumberOfUsers() > 0) {
    userMap = kinect.userMap();
    loadPixels();
    for (int x = 0; x < width; x++) {
      for (int y = 0; y < height; y++) {
        int loc = x + y * width;
        // != 0 matches the pixels of every user, not just one
        if (userMap[loc] != 0) {
          if (random(100) < 50 || (flag == true && random(100) < 80)) {
            flag = true;
            color pixelColor = pixels[loc];
            float mixPercentage = .5 + random(50)/100;
            pixels[loc] = lerpColor(pixelColor, randomColor, mixPercentage);
          } else {
            flag = false;
            randomColor = color(random(255), random(255), random(255), 255);
          }
        }
      }
    }
    updatePixels();
  }
}

void crt() {
  if (kinect.getNumberOfUsers() > 0) {
    userMap = kinect.userMap();
    loadPixels();
    for (int x = 0; x < width; x++) {
      for (int y = 0; y < height; y++) {
        int loc = x + y * width;
        if (userMap[loc] != 0) {
          pixels[loc] = color(random(255));
        }
      }
    }
    updatePixels();
  }
}
```

I know that `userMap()` gives me the pixels of all users, and my `crt()`/`crtcolor()` code changes all of them, which is why it is not working. Is there a way or function that gives me the pixels associated with each user instead?
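A minimal plain-Java sketch of one answer, assuming `pixels` and `userMap` have the same size and row-major layout (`index = x + y * width`): compare each `userMap` entry with one specific user ID instead of testing `!= 0`, so an effect touches only that user's silhouette. `PerUserEffect.applyToUser` is a hypothetical helper, not a library call.

```java
import java.util.function.IntUnaryOperator;

class PerUserEffect {
    // Applies `effect` only to pixels whose userMap entry equals userId.
    // Pixels of other users and of the background are left untouched.
    static void applyToUser(int[] pixels, int[] userMap, int userId,
                            IntUnaryOperator effect) {
        if (pixels.length != userMap.length) {
            throw new IllegalArgumentException("pixels and userMap sizes differ");
        }
        for (int i = 0; i < userMap.length; i++) {
            if (userMap[i] == userId) {
                pixels[i] = effect.applyAsInt(pixels[i]);
            }
        }
    }
}
```

In a sketch, `crt()` and `crtcolor()` could take a user ID parameter and test `userMap[loc] == id` in place of `userMap[loc] != 0`; each ID returned by `kinect.getUsers()` would then get its own glitch.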

Problem with Screen for a Kinect User Depth Map Drawn with a Class

Hello,

I created a Kinect user depth map and drew it with a Point class (for manipulating the particles) that will be sent to Resolume through Spout. The problem arose when I previewed the result.

It seems that there is another screen in the upper right corner.

I am not quite sure how to get around it.

The following is part of the code:

```java
void draw() {
  bodyJam.update();
  background(0, 0, 0);
  translate(width/2, height/2, 0);
  rotateX(rotX);
  rotateY(rotY);
  scale(zoomF);
  strokeWeight(3);
  int[] userMap = bodyJam.userMap();
  int[] depthMap = bodyJam.depthMap();
  int steps = 5;
  int index;
  PVector realWorldBlurp;
  translate(0, 0, -1000);
  PVector[] realWorldMap = bodyJam.depthMapRealWorld();
  // note: the beginShape() reference says transformations and other shapes
  // (such as the point() call in display()) do not work between beginShape() and endShape()
  beginShape(TRIANGLES);
  for (int i = 0; i < nodes.size(); i++) {
    MovingNode currentNode = nodes.get(i);
    currentNode.setNumNeighbors(countNumNeighbors(currentNode, maxDistance));
  }
  // iterate backwards so removing a node does not skip the one after it
  for (int i = nodes.size() - 1; i >= 0; i--) {
    MovingNode currentNode = nodes.get(i);
    if (currentNode.x > width || currentNode.x < 0 || currentNode.y > height || currentNode.y < 0) {
      nodes.remove(i);
    }
  }
  for (int i = 0; i < nodes.size(); i++) {
    MovingNode currentNode = nodes.get(i);
    for (int j = 0; j < currentNode.neighbors.size(); j++) {
      MovingNode neighborNode = currentNode.neighbors.get(j); // not used yet
    }
    currentNode.display();
  }
  for (int y = 0; y < bodyJam.depthHeight(); y += steps) {
    for (int x = 0; x < bodyJam.depthWidth(); x += steps) {
      index = x + y * bodyJam.depthWidth();
      if (depthMap[index] > 0) {
        translate(width/2, height/2, 0); // accumulates on every iteration
        realWorldBlurp = realWorldMap[index];
        if (userMap[index] == 0)
          noStroke();
        else
          addNewNode(realWorldBlurp.x, realWorldBlurp.y, realWorldBlurp.z, random(-dx, dx), random(-dx, dx));
      }
    }
  }
  endShape();
  spout.sendTexture();
}

void addNewNode(float xPos, float yPos, float zPos, float dx, float dy) {
  MovingNode node = new MovingNode(xPos + dx, yPos + dy, zPos);
  node.setNumNeighbors(countNumNeighbors(node, maxDistance));
  if (node.numNeighbors < maxNeighbors) {
    nodes.add(node);
  }
}

int countNumNeighbors(MovingNode nodeA, float maxNeighborDistance) {
  int numNeighbors = 0;
  nodeA.clearNeighbors();
  for (int i = 0; i < nodes.size(); i++) {
    MovingNode nodeB = nodes.get(i);
    float distance = sqrt((nodeA.x-nodeB.x)*(nodeA.x-nodeB.x)
                        + (nodeA.y-nodeB.y)*(nodeA.y-nodeB.y)
                        + (nodeA.z-nodeB.z)*(nodeA.z-nodeB.z));
    if (distance < maxNeighborDistance) {
      numNeighbors++;
      nodeA.addNeighbor(nodeB);
    }
  }
  return numNeighbors;
}
```

Some lines from the Point class are as follows:

```java
MovingNode(float xPos, float yPos, float zPos) {
  x = xPos;
  y = yPos;
  z = zPos;
  numNeighbors = 0;
  neighbors = new ArrayList<MovingNode>();
}

void display() {
  move();
  strokeWeight(3);
  stroke(200);
  point(x, y, z);
}

void move() {
  xAccel = random(-accelValue, accelValue);
  yAccel = random(-accelValue, accelValue);
  zAccel = random(-accelValue, accelValue);
  xVel += xAccel;
  yVel += yAccel;
  zVel += zAccel;
  x += xVel;
  y += yVel;
  z += zVel;
}

void addNeighbor(MovingNode node) {
  neighbors.add(node);
}

void setNumNeighbors(int num) {
  numNeighbors = num;
}

void clearNeighbors() {
  neighbors = new ArrayList<MovingNode>();
}
```

Any help is much appreciated. Thank you
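One concrete issue in the original bounds-check loop is that it removes nodes while walking the list forward: after a removal, the next node shifts into the current index and is skipped for that frame. A minimal plain-Java illustration of the backward-iteration fix follows, with `Float` x values standing in for `MovingNode` objects (a simplification; `NodeCulling` is a hypothetical name).

```java
import java.util.ArrayList;
import java.util.List;

class NodeCulling {
    // Removes every value outside [min, max].
    // Walking from the end means a removal only shifts elements
    // that were already checked, so nothing is skipped.
    static void removeOutOfRange(List<Float> xs, float min, float max) {
        for (int i = xs.size() - 1; i >= 0; i--) {
            float x = xs.get(i);
            if (x < min || x > max) {
                xs.remove(i); // int argument: removes by index
            }
        }
    }
}
```

With a forward loop, two consecutive out-of-range nodes would leave the second one in the list until the next frame.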

userMap questions

> if i want to resize it, i just can use a this.resize(newWidth, newHeight),

I don't think you mean resizing the sketch. You can resize the image either when you draw it or in the image buffer itself. For the former, use `image(img, px, py, w, h)`, where the parameters of this function are defined at https://processing.org/reference/image_.html. For the latter, you can copy your current image information (either PGraphics or PImage) into another buffer using either:

https://processing.org/reference/copy_.html

https://processing.org/reference/get_.html

Unfortunately I can't help much more, as it is not clear how `kinect.userMap();` allows you to pick those pixels from your Kinect image. You said:

> i managed to isolate what pixel belong to what user

It is not clear from your first post how you did that. However, if that is the case, then copying the information from the Kinect into your g1 and g2 objects should be one of the steps.

Did you check previous posts? https://forum.processing.org/two/search?Search=userMap

These posts are also relevant to your question: https://forum.processing.org/two/discussion/comment/7502/#Comment_7502

https://forum.processing.org/two/discussion/comment/12206/#Comment_12206

https://forum.processing.org/two/discussion/11302/multiple-users-in-kinect-processing/p1

Kf
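If the goal is to resize the pixel buffer itself (for example, to make a 640x480 `userMap` line up with a sketch of a different size), nearest-neighbour sampling is a reasonable choice because it copies existing values instead of blending them; a blended user ID would be meaningless. This is a plain-Java sketch of that technique under those assumptions, not Processing's own `resize()` implementation; `PixelResize` is a hypothetical name.

```java
class PixelResize {
    // Nearest-neighbour resize of a row-major buffer (index = x + y * width).
    // Works for colours and for label maps such as userMap, since every
    // output value is copied from exactly one input value.
    static int[] resizeNearest(int[] src, int srcW, int srcH, int dstW, int dstH) {
        int[] dst = new int[dstW * dstH];
        for (int y = 0; y < dstH; y++) {
            int sy = y * srcH / dstH; // integer division picks the source row
            for (int x = 0; x < dstW; x++) {
                int sx = x * srcW / dstW; // and the source column
                dst[x + y * dstW] = src[sx + sy * srcW];
            }
        }
        return dst;
    }
}
```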

userMap questions

@kfrajer I guess my questions were not that clear, or I couldn't understand your answers.

The `userMap()` function fills an `int[]` array with the user detected at each pixel; it is managed under the SimpleOpenNI hood, which keeps track of active users. In my global variables I just declared `int[] userMap`. Through this function, in `draw()` I access hidden data that knows which user owns which pixel, so if a pixel belongs to user 1, I fill it from g1, and so on. It proved to be faster than scanning every pixel of the screen.

If I understand what you said, the 'lone' `loadPixels()` loads the whole screen, transforms the pixels and updates the screen. I tried using `bgG.loadPixels()`, but then the changes were irreversible, while the lone call preserves the background from any change.

The second part of the question is kind of the reverse of the answer you sent me: I want to create a PGraphics out of each user's pixel data, assigning user 2's pixels to a user-2 PGraphics and then doing something with it.

userMap questions

This is to address your `loadPixels()` question:

Line 12 loads pixels from your (current) sketch. So, for example:

```java
void draw() {
  image(img, 0, 0, width, height);
  loadPixels();
  // pixels.length matches the sketch, not the original image size
  for (int i = 0; i < pixels.length; i++) {
    // write colors, not plain ints: a bare 255 is a fully transparent blue
    pixels[i] = red(pixels[i]) > 100 ? color(255) : color(0);
  }
  updatePixels();
}
```

In the above example, the `pixels` array contains the values loaded by `loadPixels()`. Since I draw an image in my sketch, `pixels` will hold the pixels of my image.

```java
image(img, 0, 0, width, height);
fill(255, 0, 0);
ellipse(width/2, height/2, 50, 50);
loadPixels();
```

In this second example, the `pixels` array will contain the image and the ellipse, which is drawn right after the image.
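The threshold example works on packed ARGB integers. A plain-Java sketch of the same operation makes the packing explicit: the red channel is extracted with `(c >> 16) & 0xFF`, the bit-shift form the Processing reference for `red()` gives as a faster equivalent in `colorMode(RGB, 255)`, and the results are written as opaque colours. The class and method names are hypothetical.

```java
class Threshold {
    static final int WHITE = 0xFFFFFFFF; // alpha 255, r = g = b = 255
    static final int BLACK = 0xFF000000; // alpha 255, r = g = b = 0

    // Red byte of a packed ARGB int.
    static int red(int argb) {
        return (argb >> 16) & 0xFF;
    }

    // Opaque white where red > limit, opaque black elsewhere.
    // A plain 255 would be 0x000000FF: alpha 0, i.e. invisible blue.
    static void threshold(int[] pixels, int limit) {
        for (int i = 0; i < pixels.length; i++) {
            pixels[i] = red(pixels[i]) > limit ? WHITE : BLACK;
        }
    }
}
```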

For the second question, about accessing pixels in a PGraphics, check this: https://forum.processing.org/two/discussion/20761/set-part-of-pgraphics-transparent#latest

Side comments

Unfortunately I am not familiar with `userMap=kinect.userMap();`. If this function returns all the pixels from your device, then you will need to split the image into different PGraphics. Related to your code above:

```java
for (int i = 0; i < userMap.length; i++) {
  switch(userMap[i]) {
  case 1:
    pixels[i] = g1.pixels[i];
    break;
  case 2:
    pixels[i] = g2.pixels[i];
    break;
  }
}
```

This is not robust: your example only runs correctly if PGraphics g1 (and g2) have the same size as your current sketch. The size of your sketch is defined in `setup()`. I cannot help much more at this point. Check your references about `userMap()`, and also check whether the library provides examples of how to access this user data. By the way, what type of object is `userMap` in line 8?

Kf
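For the "split the image into different PGraphics" step, here is a minimal plain-Java sketch. It assumes the source pixels and `userMap` have the same size and layout, copies only the pixels whose `userMap` entry matches one user ID, and leaves every other pixel as `0`, which is fully transparent in ARGB. `UserLayer.layerFor` is a hypothetical helper.

```java
class UserLayer {
    // Returns a new buffer holding only userId's pixels.
    // Java zero-fills new int arrays, so all other pixels start
    // as 0x00000000 (alpha 0, fully transparent).
    static int[] layerFor(int[] src, int[] userMap, int userId) {
        if (src.length != userMap.length) {
            throw new IllegalArgumentException("src and userMap sizes differ");
        }
        int[] layer = new int[src.length];
        for (int i = 0; i < src.length; i++) {
            if (userMap[i] == userId) {
                layer[i] = src[i];
            }
        }
        return layer;
    }
}
```

In Processing, such an array could be copied into the `pixels[]` of an image created with `createImage(w, h, ARGB)`, between `loadPixels()` and `updatePixels()`, giving one image per user that can be drawn or processed on its own.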

userMap questions

I have a doubt about how to access `userMap()` data, how to manipulate it, and how to convert it to a PGraphics for further manipulation. I wrote the following code and it works nicely: it differentiates between users (user 1, user 2) and fills each silhouette with a different PGraphics. `bgG` is a PGraphics that I use to fill the background.

```java
void draw() {
  image(bgG, 0, 0);
  kinect.update();

  if (kinect.getNumberOfUsers() > 0) {

    depthValues = kinect.depthMap();
    userMap = kinect.userMap();

    g1.loadPixels();
    g2.loadPixels();
    loadPixels();
    for (int i = 0; i < userMap.length; i++) {
      switch(userMap[i]) {
      case 1:
        pixels[i] = g1.pixels[i];
        break;
      case 2:
        pixels[i] = g2.pixels[i];
        break;
      }
    }
    updatePixels();
  }
}
```

My questions are:

1. What pixels am I loading with `loadPixels()`?
2. How can I access each user's pixels and use them as a mask, or how can I convert them to a PGraphics or PImage?

My goal is to isolate each user and manipulate their pixel data separately.

Thank you

How, and with which Processing libraries, can we put a background that changes color over time?

Hello all, I have a question: how, and with which Processing libraries, can we put a background that changes color over time with the Kinect? I am working with a group on a project that explains augmented reality. Being amateurs, we chose to work with the Kinect and Processing to build a small program. If you can help us, thank you in advance.

The code:

```java
import SimpleOpenNI.*;
import java.util.*;

SimpleOpenNI context;

int blob_array[];
int userCurID;
int cont_length = 640*480;

String[] sampletext = { "lu", "ma", "me", "ve", "sa", "di", "week", "end", "b", "c", "le" }; // sample random text

void setup() {
  size(640, 480);
  context = new SimpleOpenNI(this);
  context.setMirror(true);
  context.enableDepth();
  context.enableUser();
  blob_array = new int[cont_length];
}

void draw() {
  background(-1);
  context.update();
  int[] depthValues = context.depthMap();
  int[] userMap = null;
  int userCount = context.getNumberOfUsers();
  if (userCount > 0) {
    userMap = context.userMap();
  }
  loadPixels();
  background(255, 255, 150); // was (255, 260, 150); values above 255 are clamped to 255
  for (int y = 0; y < context.depthHeight(); y += 35) {
    for (int x = 0; x < context.depthWidth(); x += 35) {
      int index = x + y * context.depthWidth();
      if (userMap != null && userMap[index] > 0) {
        userCurID = userMap[index];
        blob_array[index] = 255;
        fill(150, 200, 30);
        // put your sample random text; random(0, 10) never picked the last word
        text(sampletext[int(random(sampletext.length))], x, y);
      } else {
        blob_array[index] = 0;
      }
    }
  }
}
```
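One way to make the background change over time is to derive its colour from the frame count. The plain-Java sketch below cycles the hue once every `period` frames using `java.awt.Color.HSBtoRGB`; the period, saturation and brightness values are arbitrary assumptions, and `CyclingBackground` is a hypothetical name.

```java
import java.awt.Color;

class CyclingBackground {
    // Opaque ARGB colour whose hue completes one full cycle every `period` frames.
    // HSBtoRGB takes hue in [0, 1) and sets the alpha byte to 0xFF.
    static int colorAt(int frame, int period) {
        float hue = (frame % period) / (float) period;
        return Color.HSBtoRGB(hue, 0.4f, 1.0f);
    }
}
```

Inside the Processing sketch, a comparable effect should be possible by replacing the fixed `background(...)` call in `draw()` with `colorMode(HSB, 360, 100, 100); background(frameCount % 360, 40, 100);` and then switching back with `colorMode(RGB, 255);` before the `fill()` call, so the text colour is still read as RGB.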



How to put a background that changes with time with Kinect?

Hello, here is my code. I would like the background to evolve over time, i.e. to change colors. Could you help us? We are amateurs and are just beginning with Processing.

Thank you in advance.

```java
import SimpleOpenNI.*;
import java.util.*;

SimpleOpenNI context;

int blob_array[];
int userCurID;
int cont_length = 640*480;
String[] sampletext = { "lu", "ma", "me", "ve", "sa", "di", "week", "end", "b", "c", "le" }; // sample random text

void setup() {
  size(640, 480);
  context = new SimpleOpenNI(this);
  context.setMirror(true);
  context.enableDepth();
  context.enableUser();
  blob_array = new int[cont_length];
}

void draw() {
  background(-1);
  context.update();
  int[] depthValues = context.depthMap();
  int[] userMap = null;
  int userCount = context.getNumberOfUsers();
  if (userCount > 0) {
    userMap = context.userMap();
  }
  loadPixels();
  background(255, 255, 150); // was (255, 260, 150); values above 255 are clamped to 255
  for (int y = 0; y < context.depthHeight(); y += 35) {
    for (int x = 0; x < context.depthWidth(); x += 35) {
      int index = x + y * context.depthWidth();
      if (userMap != null && userMap[index] > 0) {
        userCurID = userMap[index];
        blob_array[index] = 255;
        fill(150, 200, 30);
        // put your sample random text; random(0, 10) never picked the last word
        text(sampletext[int(random(sampletext.length))], x, y);
      } else {
        blob_array[index] = 0;
      }
    }
  }
}
```

I want to combine two Processing sketches for the Kinect, but it doesn't work. I don't understand why.

Hello, I am working with a group on a virtual reality project based on the Kinect. I am looking to change the background color as a function of time. We are amateurs, so any help would be a pleasure. Thank you in advance.

The first code:

```java
import SimpleOpenNI.*;
import java.util.*;

SimpleOpenNI context;

int blob_array[];
int userCurID;
int cont_length = 640*480;
String[] sampletext = { "lu", "ma", "me", "ve", "sa", "di", "week", "end", "b", "c", "le" }; // sample random text

void setup() {
  size(640, 480);
  context = new SimpleOpenNI(this);
  context.setMirror(true);
  context.enableDepth();
  context.enableUser();
  blob_array = new int[cont_length];
}

void draw() {
  background(-1);
  context.update();
  int[] depthValues = context.depthMap();
  int[] userMap = null;
  int userCount = context.getNumberOfUsers();
  if (userCount > 0) {
    userMap = context.userMap();
  }
  loadPixels();
  background(255, 255, 150); // was (255, 260, 150); values above 255 are clamped to 255
  for (int y = 0; y < context.depthHeight(); y += 35) {
    for (int x = 0; x < context.depthWidth(); x += 35) {
      int index = x + y * context.depthWidth();
      if (userMap != null && userMap[index] > 0) {
        userCurID = userMap[index];
        blob_array[index] = 255;
        fill(150, 200, 30);
        // put your sample random text; random(0, 10) never picked the last word
        text(sampletext[int(random(sampletext.length))], x, y);
      } else {
        blob_array[index] = 0;
      }
    }
  }
}
```

The second code, the main sketch:

```java
// import libraries
import processing.opengl.*; // opengl
import SimpleOpenNI.*; // kinect
import blobDetection.*; // blobs

// this is a regular java import so we can use and extend the polygon class (see PolygonBlob)
import java.awt.Polygon;

// declare SimpleOpenNI object
SimpleOpenNI context;
// declare BlobDetection object
BlobDetection theBlobDetection;
// declare custom PolygonBlob object (see class for more info)
PolygonBlob poly = new PolygonBlob();

// PImage to hold incoming imagery and smaller one for blob detection
PImage cam, blobs;
// the kinect's dimensions to be used later on for calculations
int kinectWidth = 640;
int kinectHeight = 480;
// to center and rescale from 640x480 to higher custom resolutions
float reScale;

// background color
color bgColor;
// three color palettes (artifact from me storing many interesting color palettes as strings in an external data file ;-)
String[] palettes = {
  "-1117720,-13683658,-8410437,-9998215,-1849945,-5517090,-4250587,-14178341,-5804972,-3498634",
  "-67879,-9633503,-8858441,-144382,-4996094,-16604779,-588031",
  "-16711663,-13888933,-9029017,-5213092,-1787063,-11375744,-2167516,-15713402,-5389468,-2064585"
};

// an array called flow of 2250 Particle objects (see Particle class)
Particle[] flow = new Particle[2250];
// global variables to influence the movement of all particles
float globalX, globalY;

void setup() {
  // it's possible to customize this, for example 1920x1080
  size(1280, 720, OPENGL);
  // initialize SimpleOpenNI object
  context = new SimpleOpenNI(this);
  if (!context.enableDepth() || !context.enableUser()) {
    // if enableDepth() or enableUser() returns false
    // then the Kinect is not working correctly
    // make sure the green light is blinking
    println("Kinect not connected!");
    exit();
  } else {
    // mirror the image to be more intuitive
    context.setMirror(true);
    // calculate the reScale value
    // currently it's rescaled to fill the complete width (cuts off top and bottom)
    // it's also possible to fill the complete height (leaves empty sides)
    reScale = (float) width / kinectWidth;
    // create a smaller blob image for speed and efficiency
    blobs = createImage(kinectWidth/3, kinectHeight/3, RGB);
    // initialize blob detection object to the blob image dimensions
    theBlobDetection = new BlobDetection(blobs.width, blobs.height);
    theBlobDetection.setThreshold(0.2);
    setupFlowfield();
  }
}

void draw() {
  // fading background
  noStroke();
  fill(bgColor, 65);
  rect(0, 0, width, height);
  // update the SimpleOpenNI object
  context.update();
  // put the image into a PImage
  cam = context.depthImage();
  // copy the image into the smaller blob image
  blobs.copy(cam, 0, 0, cam.width, cam.height, 0, 0, blobs.width, blobs.height);
  // blur the blob image
  blobs.filter(BLUR);
  // detect the blobs
  theBlobDetection.computeBlobs(blobs.pixels);
  // clear the polygon (original functionality)
  poly.reset();
  // create the polygon from the blobs (custom functionality, see class)
  poly.createPolygon();
  drawFlowfield();
}

void setupFlowfield() {
  // set stroke weight (for particle display) to 2.5
  strokeWeight(2.5);
  // initialize all particles in the flow
  for (int i = 0; i < flow.length; i++) {
    flow[i] = new Particle(i/10000.0);
  }
  // set all colors randomly now
  setRandomColors(1);
}

void drawFlowfield() {
  // center and reScale from Kinect to custom dimensions
  translate(0, (height-kinectHeight*reScale)/2);
  scale(reScale);
  // set global variables that influence the particle flow's movement
  globalX = noise(frameCount * 0.01) * width/2 + width/4;
  globalY = noise(frameCount * 0.005 + 5) * height;
  // update and display all particles in the flow
  for (Particle p : flow) {
    p.updateAndDisplay();
  }
  // set the colors randomly every 240th frame
  setRandomColors(240);
}

// sets the colors every nth frame
void setRandomColors(int nthFrame) {
  if (frameCount % nthFrame == 0) {
    // turn a palette into a series of strings
    String[] paletteStrings = split(palettes[int(random(palettes.length))], ",");
    // turn strings into colors
    color[] colorPalette = new color[paletteStrings.length];
    for (int i = 0; i < paletteStrings.length; i++) {
      colorPalette[i] = int(paletteStrings[i]);
    }
    // set background color to first color from palette
    bgColor = colorPalette[0];
    // set all particle colors randomly to color from palette (excluding first aka background color)
    for (int i = 0; i < flow.length; i++) {
      flow[i].col = colorPalette[int(random(1, colorPalette.length))];
    }
  }
}
```
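The palette strings store each colour as a signed 32-bit ARGB integer, the same representation Processing uses for its `color` type, which is why `int(paletteStrings[i])` yields a usable colour directly. A plain-Java sketch of parsing a palette string and decoding one value into its channels, with hypothetical names:

```java
class PaletteColor {
    // Splits a packed ARGB int into {alpha, red, green, blue}.
    static int[] channels(int argb) {
        return new int[] {
            (argb >>> 24) & 0xFF,
            (argb >> 16) & 0xFF,
            (argb >> 8) & 0xFF,
            argb & 0xFF
        };
    }

    // Parses a comma-separated palette such as "-1117720,-13683658".
    static int[] parsePalette(String csv) {
        String[] parts = csv.split(",");
        int[] colors = new int[parts.length];
        for (int i = 0; i < parts.length; i++) {
            colors[i] = Integer.parseInt(parts[i].trim());
        }
        return colors;
    }
}
```

For example, `-1117720` is `0xFFEEF1E8`: fully opaque, with red 238, green 241 and blue 232, a very light grey that becomes `bgColor` whenever the first palette is picked.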

The particle class

```java
// a basic noise-based moving particle
class Particle {
  // unique id, (previous) position, speed
  float id, x, y, xp, yp, s, d;
  color col; // color

  Particle(float id) {
    this.id = id;
    s = random(2, 6); // speed
  }

  void updateAndDisplay() {
    // let it flow, end with a new x and y position
    id += 0.01;
    d = (noise(id, x/globalY, y/globalY)-0.5)*globalX;
    x += cos(radians(d))*s;
    y += sin(radians(d))*s;

    // constrain to boundaries
    if (x<-10) x=xp=kinectWidth+10;
    if (x>kinectWidth+10) x=xp=-10;
    if (y<-10) y=yp=kinectHeight+10;
    if (y>kinectHeight+10) y=yp=-10;

    // if there is a polygon (more than 0 points)
    if (poly.npoints > 0) {
      // if this particle is outside the polygon
      if (!poly.contains(x, y)) {
        // while it is outside the polygon
        while (!poly.contains(x, y)) {
          // randomize x and y
          x = random(kinectWidth);
          y = random(kinectHeight);
        }
        // set previous x and y, to this x and y
        xp=x;
        yp=y;
      }
    }

    // individual particle color
    stroke(col);
    // line from previous to current position
    line(xp, yp, x, y);
    // set previous to current position
    xp=x;
    yp=y;
  }
}
```

The PolygonBlob class :

// an extended polygon class with my own customized createPolygon() method (feel free to improve!) class PolygonBlob extends Polygon {

// took me some time to make this method fully self-sufficient // now it works quite well in creating a correct polygon from a person's blob // of course many thanks to v3ga, because the library already does a lot of the work void createPolygon() { // an arrayList... of arrayLists... of PVectors // the arrayLists of PVectors are basically the person's contours (almost but not completely in a polygon-correct order) ArrayList<ArrayList> contours = new ArrayList<ArrayList>(); // helpful variables to keep track of the selected contour and point (start/end point) int selectedContour = 0; int selectedPoint = 0;

    // create contours from blobs
    // go over all the detected blobs
    for (int n=0 ; n<theBlobDetection.getBlobNb(); n++) {
      Blob b = theBlobDetection.getBlob(n);
      // for each substantial blob...
      if (b != null && b.getEdgeNb() > 100) {
        // create a new contour arrayList of PVectors
        ArrayList<PVector> contour = new ArrayList<PVector>();
        // go over all the edges in the blob
        for (int m=0; m<b.getEdgeNb(); m++) {
          // get the edgeVertices of the edge
          EdgeVertex eA = b.getEdgeVertexA(m);
          EdgeVertex eB = b.getEdgeVertexB(m);
          // if neither is null...
          if (eA != null && eB != null) {
            // get next and previous edgeVertexA
            EdgeVertex fn = b.getEdgeVertexA((m+1) % b.getEdgeNb());
            EdgeVertex fp = b.getEdgeVertexA((max(0, m-1)));
            // calculate distance between vertexA and next and previous edgeVertexA respectively
            // positions are multiplied by kinect dimensions because the blob library returns normalized values
            float dn = dist(eA.x*kinectWidth, eA.y*kinectHeight, fn.x*kinectWidth, fn.y*kinectHeight);
            float dp = dist(eA.x*kinectWidth, eA.y*kinectHeight, fp.x*kinectWidth, fp.y*kinectHeight);
            // if either distance is bigger than 15
            if (dn > 15 || dp > 15) {
              // if the current contour size is bigger than zero
              if (contour.size() > 0) {
                // add final point
                contour.add(new PVector(eB.x*kinectWidth, eB.y*kinectHeight));
                // add current contour to the arrayList
                contours.add(contour);
                // start a new contour arrayList
                contour = new ArrayList<PVector>();
              // if the current contour size is 0 (aka it's a new list)
              } else {
                // add the point to the list
                contour.add(new PVector(eA.x*kinectWidth, eA.y*kinectHeight));
              }
            // if both distances are smaller than 15 (aka the points are close)
            } else {
              // add the point to the list
              contour.add(new PVector(eA.x*kinectWidth, eA.y*kinectHeight));
            }
          }
        }
      }
    }
    // at this point in the code we have a list of contours (aka an arrayList of arrayLists of PVectors)
    // now we need to sort those contours into a correct polygon. To do this we need two things:
    // 1. The correct order of contours
    // 2. The correct direction of each contour
    // as long as there are contours left...
    while (contours.size() > 0) {
      // find next contour
      float distance = 999999999;
      // if there are already points in the polygon
      if (npoints > 0) {
        // use the polygon's last point as a starting point
        PVector lastPoint = new PVector(xpoints[npoints-1], ypoints[npoints-1]);
        // go over all contours
        for (int i=0; i<contours.size(); i++) {
          ArrayList<PVector> c = contours.get(i);
          // get the contour's first point
          PVector fp = c.get(0);
          // get the contour's last point
          PVector lp = c.get(c.size()-1);
          // if the distance between the current contour's first point and the polygon's last point is smaller than distance
          if (fp.dist(lastPoint) < distance) {
            // set distance to this distance
            distance = fp.dist(lastPoint);
            // set this as the selected contour
            selectedContour = i;
            // set selectedPoint to 0 (which signals first point)
            selectedPoint = 0;
          }
          // if the distance between the current contour's last point and the polygon's last point is smaller than distance
          if (lp.dist(lastPoint) < distance) {
            // set distance to this distance
            distance = lp.dist(lastPoint);
            // set this as the selected contour
            selectedContour = i;
            // set selectedPoint to 1 (which signals last point)
            selectedPoint = 1;
          }
        }
      // if the polygon is still empty
      } else {
        // use a starting point in the lower-right
        PVector closestPoint = new PVector(width, height);
        // go over all contours
        for (int i=0; i<contours.size(); i++) {
          ArrayList<PVector> c = contours.get(i);
          // get the contour's first point
          PVector fp = c.get(0);
          // get the contour's last point
          PVector lp = c.get(c.size()-1);
          // if the first point is in the lowest 5 pixels of the (kinect) screen and more to the left than the current closestPoint
          if (fp.y > kinectHeight-5 && fp.x < closestPoint.x) {
            // set closestPoint to first point
            closestPoint = fp;
            // set this as the selected contour
            selectedContour = i;
            // set selectedPoint to 0 (which signals first point)
            selectedPoint = 0;
          }
          // if the last point is in the lowest 5 pixels of the (kinect) screen and more to the left than the current closestPoint
          if (lp.y > kinectHeight-5 && lp.x < closestPoint.x) {
            // set closestPoint to last point
            closestPoint = lp;
            // set this as the selected contour
            selectedContour = i;
            // set selectedPoint to 1 (which signals last point)
            selectedPoint = 1;
          }
        }
      }
      // add contour to polygon
      ArrayList<PVector> contour = contours.get(selectedContour);
      // if selectedPoint is bigger than zero (aka last point) then reverse the arrayList of points
      if (selectedPoint > 0) { java.util.Collections.reverse(contour); }
      // add all the points in the contour to the polygon
      for (PVector p : contour) {
        addPoint(int(p.x), int(p.y));
      }
      // remove this contour from the list of contours
      contours.remove(selectedContour);
      // the while loop above makes all of this code loop until the number of contours is zero
      // at that time all the points in all the contours have been added to the polygon... in the correct order (hopefully)
    }
  }
}
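The ordering step above is a greedy nearest-endpoint chain: repeatedly take the remaining contour whose first or last point lies closest to the polygon's current end, and reverse it when its last point is the closer one. Below is a minimal sketch of just that step in plain Java (the language Processing is built on), so it runs without Processing, SimpleOpenNI or blobDetection. `Pt` is a hypothetical stand-in for `PVector`, contours are assumed non-empty, and, as a simplification, the first contour is picked by distance to a caller-supplied start point instead of the bottom-edge rule used in the code above.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ContourChain {
    // Hypothetical 2D point, standing in for Processing's PVector.
    static final class Pt {
        final float x, y;

        Pt(float x, float y) {
            this.x = x;
            this.y = y;
        }

        float dist(Pt o) {
            return (float) Math.hypot(x - o.x, y - o.y);
        }

        @Override
        public String toString() {
            return "(" + (int) x + "," + (int) y + ")";
        }
    }

    // Greedily joins open (non-empty) contours into one point list. Each step takes
    // the remaining contour with the endpoint nearest to the current end of the
    // polygon, reversing it when its last point is the nearer one.
    static List<Pt> chain(List<List<Pt>> contours, Pt start) {
        // copy the contours so reversing never touches the caller's lists
        List<List<Pt>> remaining = new ArrayList<>();
        for (List<Pt> c : contours) {
            remaining.add(new ArrayList<>(c));
        }
        List<Pt> polygon = new ArrayList<>();
        Pt end = start;
        while (!remaining.isEmpty()) {
            int best = 0;
            boolean reverse = false;
            float bestDist = Float.MAX_VALUE;
            for (int i = 0; i < remaining.size(); i++) {
                List<Pt> c = remaining.get(i);
                float dFirst = c.get(0).dist(end);
                float dLast = c.get(c.size() - 1).dist(end);
                if (dFirst < bestDist) { bestDist = dFirst; best = i; reverse = false; }
                if (dLast < bestDist) { bestDist = dLast; best = i; reverse = true; }
            }
            List<Pt> next = remaining.remove(best);
            if (reverse) {
                Collections.reverse(next);
            }
            polygon.addAll(next);
            end = polygon.get(polygon.size() - 1);
        }
        return polygon;
    }

    // Three pieces of an outline, out of order, one of them stored backwards.
    static List<List<Pt>> demoContours() {
        List<List<Pt>> contours = new ArrayList<>();
        contours.add(List.of(new Pt(10, 6), new Pt(0, 9)));
        contours.add(List.of(new Pt(10, 5), new Pt(6, 0)));
        contours.add(List.of(new Pt(0, 0), new Pt(5, 0)));
        return contours;
    }

    public static void main(String[] args) {
        System.out.println(chain(demoContours(), new Pt(0, 0)));
        // prints [(0,0), (5,0), (6,0), (10,5), (10,6), (0,9)]
    }
}
```

Being greedy, this is cheap (quadratic in the number of contours) but is not guaranteed to find the right order when endpoints of unrelated contours happen to lie close together.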

// import libraries
import processing.opengl.*; // opengl
import SimpleOpenNI.*; // kinect
import blobDetection.*; // blobs
import java.util.*;

// declare SimpleOpenNI object
SimpleOpenNI context;

// the Kinect's depth/user map dimensions
int kinectWidth = 640;
int kinectHeight = 480;
// scale factor from the Kinect's resolution to the sketch size (set in setup)
float reScale;

// background color
color bgColor;
// three color palettes (artifact from me storing many interesting color palettes as strings in an external data file ;-)
String[] palettes = {
  "-1117720,-13683658,-8410437,-9998215,-1849945,-5517090,-4250587,-14178341,-5804972,-3498634",
  "-67879,-9633503,-8858441,-144382,-4996094,-16604779,-588031",
  "-16711663,-13888933,-9029017,-5213092,-1787063,-11375744,-2167516,-15713402,-5389468,-2064585"
};

int cont_length = 640*480;
int[] blob_array = new int[cont_length];
int userCurID;
// sample random text
String[] sampletext = { "lu", "ma", "me", "ve", "sa", "di", "week", "end", "b", "c", "le" };

void setup() {
  // it's possible to customize this, for example 1920x1080
  size(1280, 720, OPENGL);
  // initialize SimpleOpenNI object
  context = new SimpleOpenNI(this);
  if (!context.enableDepth() || !context.enableUser()) {
    // if enableDepth() or enableUser() returns false
    // then the Kinect is not working correctly
    // make sure the green light is blinking
    println("Kinect not connected!");
    exit();
  } else {
    // mirror the image to be more intuitive
    context.setMirror(true);
    // calculate the reScale value
    // currently it's rescaled to fill the complete width (cuts off top-bottom)
    // it's also possible to fill the complete height (leaves empty sides)
    reScale = (float) width / kinectWidth;
  }
}

void draw() {
  // fading background
  noStroke();
  fill(bgColor, 65);
  rect(0, 0, width, height);

  background(-1);
  context.update();
  int[] depthValues = context.depthMap();
  int[] userMap = null;
  int userCount = context.getNumberOfUsers();
  if (userCount > 0) {
    userMap = context.userMap();
  }

  // sample the user map on a 35-pixel grid and draw a word wherever a user is found
  for (int y=0; y<context.depthHeight(); y+=35) {
    for (int x=0; x<context.depthWidth(); x+=35) {
      int index = x + y * context.depthWidth();
      if (userMap != null && userMap[index] > 0) {
        userCurID = userMap[index];
        blob_array[index] = 255;
        fill(150, 200, 30);
        // put your sample random text
        text(sampletext[int(random(sampletext.length))], x, y);
      } else {
        blob_array[index] = 0;
      }
    }
  }
}

// sets the colors every nth frame
void setRandomColors(int nthFrame) {
  if (frameCount % nthFrame == 0) {
    // pick a random palette and split it into its color strings
    String[] paletteStrings = split(palettes[int(random(palettes.length))], ",");
    // turn strings into colors
    color[] colorPalette = new color[paletteStrings.length];
    for (int i=0; i<paletteStrings.length; i++) {
      colorPalette[i] = int(paletteStrings[i]);
    }
    // set background color to first color from palette
    bgColor = colorPalette[0];
  }
}
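The palette strings above store each color as a signed decimal integer, which is a packed 0xAARRGGBB value; that is why `int(paletteStrings[i])` can be stored straight into a `color`. A minimal plain-Java sketch of the decoding, assuming that standard ARGB packing; `parsePalette` and `argb` are hypothetical helper names. Inside a sketch, Processing's `alpha()`, `red()`, `green()` and `blue()` functions should give the same channels as floats in the default 0-255 range.

```java
import java.util.Locale;

public class PaletteColors {
    // Splits a comma-separated palette string into packed ARGB ints.
    static int[] parsePalette(String palette) {
        String[] parts = palette.split(",");
        int[] colors = new int[parts.length];
        for (int i = 0; i < parts.length; i++) {
            colors[i] = Integer.parseInt(parts[i].trim());
        }
        return colors;
    }

    // Unpacks 0xAARRGGBB into {alpha, red, green, blue}, each 0..255.
    static int[] argb(int c) {
        return new int[] {
            (c >>> 24) & 0xFF, // alpha
            (c >> 16) & 0xFF,  // red
            (c >> 8) & 0xFF,   // green
            c & 0xFF           // blue
        };
    }

    public static void main(String[] args) {
        for (int c : parsePalette("-1117720,-13683658,-8410437")) {
            int[] ch = argb(c);
            System.out.printf(Locale.ROOT, "%d = #%08X -> a=%d r=%d g=%d b=%d%n",
                    c, c, ch[0], ch[1], ch[2], ch[3]);
        }
        // first line: -1117720 = #FFEEF1E8 -> a=255 r=238 g=241 b=232
    }
}
```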

SimpleOpenNI, pixels(), array coordinates

I made this sketch, and I want to make the ellipse interact with the Kinect's user map. I tried to find the coordinates in the array, but it doesn't seem to work. I'm using Processing 2+ and SimpleOpenNI 1.96. I will be thankful for any help.

Here is the sketch

`import processing.opengl.*;
import SimpleOpenNI.*;

SimpleOpenNI kinect;

PImage userImage;
int userID;
int[] userMap;

PVector location = new PVector(100, 100);
PVector velocity = new PVector(2.5, 5);

PVector _v;
int _Xvalue;
int _Yvalue;

PImage rgbImage;

void setup() {
  size(640, 480, OPENGL);

  kinect = new SimpleOpenNI(this);
  kinect.enableDepth();
  kinect.enableUser();
}

void draw() {
  background(0);

  kinect.update();

  if (kinect.getNumberOfUsers() > 0) {

    userMap = kinect.userMap();
    loadPixels();
    for (int i = 0; i < userMap.length; i++) {
      if (userMap[i] != 0) {
        pixels[i] = color(56, 56, random(255));
      }
    }
    updatePixels();
    location.add(velocity);

    for (int i = 0; i < userMap.length; i++) {
      // convert the array index i (not the value userMap[i], which is a user ID) into pixel coordinates
      int y = i / width;
      int x = i % width;

      _Xvalue = x;
      _Yvalue = y;

      PVector _v = new PVector(_Xvalue, _Yvalue);

      // bounce when the ball's pixel position is this user pixel;
      // int() drops the fraction because location.x moves in steps of 2.5
      if (userMap[i] != 0 && int(location.x) == _v.x && int(location.y) == _v.y) {
        velocity.mult(-1);
      }
    }

    if ((location.x > width) || (location.x < 0)) {
      velocity.x = velocity.x * -1;
    }
    if ((location.y > height) || (location.y < 0)) {
      velocity.y = velocity.y * -1;
    }
  }

  stroke(0);
  fill(175);

  ellipse(location.x, location.y, 16, 16);
}

void onNewUser(SimpleOpenNI curContext, int uID) {
  userID = uID;
  println("tracking");
} `
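A common pitfall with this kind of lookup is using the user ID (`userMap[i]`) where the array index (`i`) is needed. The user map is a flat, row-major array, so pixel (x, y) lives at `x + y * width`, and an index converts back with `i % width` and `i / width`. Instead of scanning the whole array every frame, the ball's position can also be looked up directly. A minimal plain-Java sketch of both, assuming a 640x480 map in which 0 means background and any other value is a user ID; `toIndex`, `xOf`, `yOf` and `hitsUser` are hypothetical helpers, not SimpleOpenNI API.

```java
public class UserMapLookup {
    static final int W = 640;
    static final int H = 480;

    // Row-major layout: pixel (x, y) is stored at index x + y * width.
    static int toIndex(int x, int y, int width) {
        return x + y * width;
    }

    static int xOf(int index, int width) {
        return index % width;
    }

    static int yOf(int index, int width) {
        return index / width;
    }

    // True when the position (e.g. a ball's PVector) lies on a non-zero entry
    // (a user ID); positions outside the map count as "no user".
    static boolean hitsUser(int[] userMap, int width, int height, float px, float py) {
        int x = (int) Math.floor(px);
        int y = (int) Math.floor(py);
        if (x < 0 || y < 0 || x >= width || y >= height) {
            return false;
        }
        return userMap[toIndex(x, y, width)] != 0;
    }

    public static void main(String[] args) {
        int[] userMap = new int[W * H];
        // fake "user 1" covering a 20x20 square from (300, 200) to (319, 219)
        for (int y = 200; y < 220; y++) {
            for (int x = 300; x < 320; x++) {
                userMap[toIndex(x, y, W)] = 1;
            }
        }
        System.out.println(hitsUser(userMap, W, H, 310.5f, 205.2f)); // true
        System.out.println(hitsUser(userMap, W, H, 100f, 100f));     // false
        int i = toIndex(310, 205, W);
        System.out.println(i + " -> (" + xOf(i, W) + ", " + yOf(i, W) + ")"); // 131510 -> (310, 205)
    }
}
```

A Processing version of `hitsUser(userMap, width, height, location.x, location.y)` would replace the whole second `for` loop with a single array read per frame.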

How do I make this full screen, or at least increase the dimensions?

I'll try and keep this short and snappy. I have this code that gives me a simple black silhouette in Processing 2.0 using the Kinect; unfortunately, it's stuck at 640 x 480. I know this is because the Kinect can only detect things at this resolution, but I really need the output to be larger: not necessarily full screen, but at least 1280x720.

I am a complete beginner at all this, and I'm only doing it for a graphic design project at uni, so please keep it basic so my simple mind can understand.

import SimpleOpenNI.*;

SimpleOpenNI context;
int[] userMap;
PImage rgbImage;
PImage userImage;
color pixelColor;

void setup() {
  size(640, 480);
  context = new SimpleOpenNI(this);
  context.enableRGB();
  context.enableDepth();
  context.enableUser();
  userImage = createImage(width, height, RGB);
}

void draw() {
  background(255,255,255);
  context.update();
  rgbImage=context.rgbImage();
  userMap=context.userMap();
  for(int y=0;y<context.depthHeight();y++){
    for(int x=0;x<context.depthWidth();x++){
      int index=x+y*640;
      if(userMap[index]!=0){
        pixelColor=rgbImage.pixels[index];
        userImage.pixels[index]=color(0,0,0);
      }else{
        userImage.pixels[index]=color(255);
      }
    }
  }
  userImage.updatePixels();
  image(userImage,0,0);
}

I'd also like to mention that I am using a v1 Kinect.

Any help would be so, so appreciated.

Dan
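Since the user map stays 640x480 whatever the window size, one way to get a larger silhouette is to keep `userImage` at the Kinect's resolution and scale it only when drawing. For a 4:3 image in a 16:9 window there are two common choices: cover the whole window and crop top and bottom, or fit the whole image and leave bars at the sides. A minimal plain-Java sketch of that arithmetic, plus a nearest-neighbour resize that shows per pixel what a scaled draw does; `placement` and `resizeNearest` are hypothetical helpers.

```java
import java.util.Locale;

public class KinectScale {
    // Returns {scale, offsetX, offsetY, drawW, drawH} for drawing a srcW x srcH
    // image centred in a dstW x dstH window. cover = fill the window and crop
    // the overflow; otherwise fit the whole image and leave empty bars.
    static float[] placement(int srcW, int srcH, int dstW, int dstH, boolean cover) {
        float sx = (float) dstW / srcW;
        float sy = (float) dstH / srcH;
        float s = cover ? Math.max(sx, sy) : Math.min(sx, sy);
        float drawW = srcW * s;
        float drawH = srcH * s;
        return new float[] {s, (dstW - drawW) / 2f, (dstH - drawH) / 2f, drawW, drawH};
    }

    // Nearest-neighbour resize of a row-major pixel array: every output pixel
    // copies the source pixel its position maps back to.
    static int[] resizeNearest(int[] src, int srcW, int srcH, int dstW, int dstH) {
        int[] dst = new int[dstW * dstH];
        for (int y = 0; y < dstH; y++) {
            int sy = y * srcH / dstH;
            for (int x = 0; x < dstW; x++) {
                int sx = x * srcW / dstW;
                dst[x + y * dstW] = src[sx + sy * srcW];
            }
        }
        return dst;
    }

    public static void main(String[] args) {
        for (boolean cover : new boolean[] {true, false}) {
            float[] p = placement(640, 480, 1280, 720, cover);
            System.out.printf(Locale.ROOT, "%s: scale %.1f, offset (%.0f, %.0f), size %.0fx%.0f%n",
                    cover ? "cover" : "fit", p[0], p[1], p[2], p[3], p[4]);
        }
    }
}
```

In Processing terms this would roughly mean `size(1280, 720)`, creating the image with `createImage(640, 480, RGB)` rather than `createImage(width, height, RGB)` (the loops index it with a row width of 640), and drawing it with `image(userImage, offsetX, offsetY, drawW, drawH)` using the numbers from `placement`. For 1280x720, covering gives a scale of 2 with offset (0, -120), and fitting gives 1.5 with offset (160, 0). `resizeNearest` is only there to illustrate; it should not be needed in the sketch itself.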