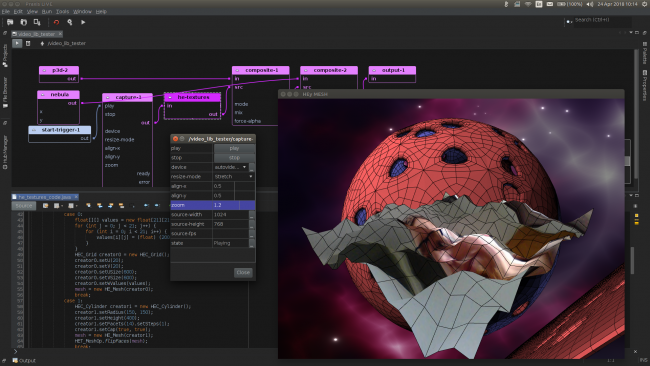

PraxisLIVE v4 released

PraxisLIVE is an open-source hybrid visual IDE and runtime for (live) creative coding. It offers a powerful alternative environment for working with the Processing library. Easily create projections, interactive spaces, custom VJ tools, sonic performance instruments, media for theatre; use it as a live digital sketchbook, test out ideas, experiment with code ...

Features include -

- Intuitive graphical node-based patching, where every component is similar to a Processing sketch - mix multiple sketches together without the overhead.

- Wide range of pre-built components.

- Real-time live programming - rewrite any component as it is running, without missing a frame, for live coding or faster creative development.

- Integrated GStreamer 1.x support with re-codeable playback and capture components.

- Unlimited number of pipelines, running locally or remotely - run in multiple processes for improved performance or crash-free multiple windows; run across multiple machines for large projects or live-coding a Pi.

- Audio library with JACK support, and ability to re-code synthesis down to the per-sample level.

- Integrated GUI, MIDI, TinkerForge, OSC and Syphon/Spout bindings.

- Threading done right - entirely lock-free communication between pipelines, locally and remotely; background resource loading and management.

- Compositing fixed - pre-multiplied alpha ensures correct blending across multiple transparent surfaces.

- Professional IDE base (Apache NetBeans) with full code-completion and support for Java 8 features.

- Fully FLOSS stack, including bundled OpenJDK on Windows and macOS.

Recent changes include -

- PraxisCORE runtime is now LGPL, with support for standalone projects.

- Support for using any Java libraries, and access to the underlying Processing PApplet, images and graphics for library integration (e.g. HE_Mesh above).

- Data root pipelines and improved reference handling for building custom background tasks, IO, passing around geometry data, etc.

- Most built-in components now re-codeable, including video composite, mixer, player and capture.

- Improved UI, with start page tasks for installing examples and additional components; updated graph UI (with bezier curves!)

Download from - https://www.praxislive.org

Online documentation (still being updated for v4) - http://praxis-live.readthedocs.io

Any Syphon Libraries?

Hi,

I finally finished a sketch and now I want to put it into projection mapping software, most probably MadMapper. MadMapper accepts Syphon input. To view the sketch in MadMapper in real time, I was thinking of using a Syphon library for p5.js, or simply for JavaScript. Has anyone used Syphon with p5.js? Is there a library for that? I know that there is one for Processing and I tried it once, but I have no idea about p5.js. Any help regarding this issue is much appreciated.

Thank you Omer

OpenSansEmoji.ttf not working in P3D?

So this has been driving me nuts all day. I was using OpenSansEmoji.ttf in a project and the emojis weren't showing up. Fast forward 6 hours later... I couldn't figure out why it was working in another test project until I saw that my main project needs the P3D in order to be able to use Syphon. My test project was not using the P3D renderer and it works there.

So now that I figured out why it wouldn't work, does anyone have any ideas on how to use this font AND Syphon? Or why this font won't render the emojis in P3D?

with second applet, strange errors about createGraphics

    PGraphics canvas;

    void setup() {
      size(400, 400, P3D);
      canvas = createGraphics(400, 400, P3D);
      String[] args = {"YourSketchNameHere"};
      SecondApplet sa = new SecondApplet();
      PApplet.runSketch(args, sa);
    }

    void draw() {
      canvas.beginDraw();
      canvas.background(127);
      canvas.lights();
      canvas.translate(width/2, height/2);
      canvas.rotateX(frameCount * 0.01);
      canvas.rotateY(frameCount * 0.01);
      canvas.box(150);
      canvas.endDraw();
      image(canvas, 0, 0);
    }

    public class SecondApplet extends PApplet {
      public void settings() {
        size(200, 100,P3D);
      }
      public void draw() {
        background(255);
        fill(0);
        ellipse(100, 50, 10, 10);
      }
    }

With that code I get "createGraphics() requires size to use P3D or P2D" (paraphrasing)

Or if I try to create an instance of a Syphon window, for example, I get null pointer.

What's the deal? My goal is to create a 2-window app with Syphon output from one window and a P5 control setup on the other.

How do I send only part of sketch with Syphon

Haha, I just researched both a bit, thanks for the tip.

I added extra space at the bottom of the sketch so I had room to paste the copied pixels, and managed to work around what I needed like this.

I'm sending the Syphon screen to Resolume, then in the advanced settings I'm mapping only the copied section at the bottom, which holds the chunk of the screen I originally needed.

How do I send only part of sketch with Syphon

I don't really know anything about Syphon, but in general you can do stuff like this using `PGraphics` canvases, which you can create using the `createGraphics()` function.

How do I send only part of sketch with Syphon

This might be a simple job, but I've been searching and trying all kinds of "simple" solutions and can't manage to send only part of the Processing sketch through Syphon.

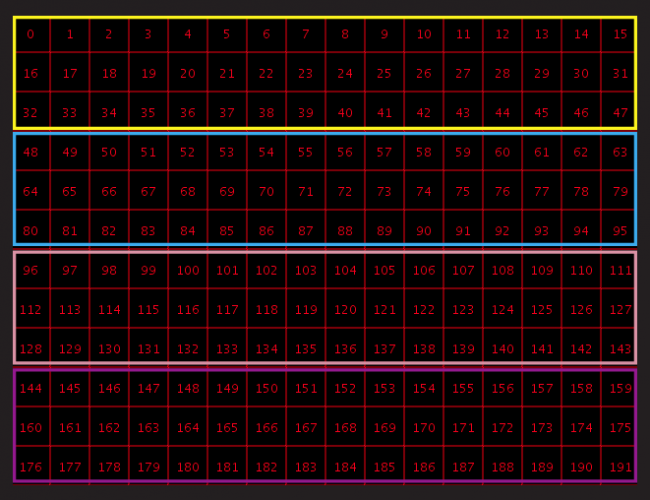

I have this grid in a sketch:

I only want to output one of the 4 coloured rectangles at a time according to a conditional statement but I just can't manage.
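One way to approach the "one rectangle at a time" part is to first work out which region of the sketch to copy. The sketch below is plain Java rather than a tested Processing/Syphon sketch; the class name `GridRegion`, the method `cell`, and the assumption that the grid divides the sketch into equal cells numbered row by row are all illustrative, not taken from the original post.

```java
import java.util.Arrays;

class GridRegion {
    // Returns {x, y, w, h} for cell `index` of a cols x rows grid.
    // Cells are numbered row by row starting at 0 (an assumed convention).
    static int[] cell(int sketchW, int sketchH, int cols, int rows, int index) {
        if (cols <= 0 || rows <= 0 || index < 0 || index >= cols * rows) {
            throw new IllegalArgumentException("index outside grid");
        }
        int w = sketchW / cols;          // width of one cell
        int h = sketchH / rows;          // height of one cell
        int x = (index % cols) * w;      // column offset
        int y = (index / cols) * h;      // row offset
        return new int[] { x, y, w, h };
    }

    public static void main(String[] args) {
        // Bottom-right cell of a 2x2 grid in an 800x600 sketch.
        System.out.println(Arrays.toString(cell(800, 600, 2, 2, 3)));
        // prints [400, 300, 400, 300]
    }
}
```

In a sketch, the conditional statement would only have to choose `index`. The region could then be copied into a `PGraphics` created with `createGraphics(w, h, P3D)`, for example by drawing `get(x, y, w, h)` into it, and that canvas passed to `server.sendImage(canvas)` instead of sending the whole screen. That last step is untested here.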

Syphon library won't install on Processing v2.2.1

Have manually installed syphon and am now getting this message:

    Syphon 1.0-RC3 by Andres Colubri http://interfaze.info/
    2018-02-28 21:23:25.911 java[834:367783] SYPHON DEBUG: SyphonClientConnectionManager: Registering for info updates
    2018-02-28 21:23:25.978 java[834:367870] SYPHON DEBUG: SyphonClientConnectionManager: Registering for info updates
    2018-02-28 21:23:25.978 java[834:367870] SYPHON DEBUG: SyphonClientConnectionManager: De-registering for info updates
    2018-02-28 21:23:25.979 java[834:367870] SYPHON DEBUG: SyphonClientConnectionManager: Ending connection

Syphon library won't install on Processing v2.2.1

Hi all, I'm trying to install the Syphon library in Processing v2.2.1, but when I try, it says it's starting and then nothing happens. Other libraries install correctly but Syphon just hangs. I need v2.2.1 to run a Kinect with Isadora.

Thanks Ray

[multiple kinects] area sensor

thank you all @TfGuy44 @kfrajer @jeremydouglass for your answers.

the situation is closer to the one described by jeremydouglass. it will be an empty, flat 10x10 m room; i don't know the exact height yet, but it's about 5 m to the ceiling grid, with people walking through it. whether people are carrying boxes, bags or whatever is irrelevant. what i'm looking for as input is their x,y coordinates in the room.

i did something like this a few years ago with 2 IR cameras mounted on the ceiling about 4 m high: i processed them with CCV, sent each blob centroid via TUIO to a processing sketch, did my processing thing and sent the resulting image via syphon to resolume (two projectors with soft-edge blending). https://instagram.com/p/f_M0OZJSua/?taken-by=eduzal

CCV has been abandoned, its last update is from 2011, and i'm looking for any solution that has those possibilities built in, like the kinect. i've found no literature on stitching kinects together or networking them so that they work together to cover a given volume. i've found things that deal with mocap or 3D scanning, but that is beyond my needs.

the mission i have now is to create a sound installation in this room. it is meant to be a quadraphonic system with one speaker in each corner. i intend to manipulate recorded samples according to the position and number of people in the room, like a reactable, and i'm looking for a way to build this input system.

thank you for your contributions.

processing + PGraphic + syphon

Hello all, I hope someone can help me. I'm a bit of a newbie and I'm really stuck.... I am creating graphics in Processing which I want to be able to layer with other material in Resolume. I am using Syphon as a source server out of Processing and into Resolume, but I can only do it by sending the SCREEN. This obviously includes a background, even if I have a transparent one in Processing. I need to be able to pack classes and custom methods into a PGraphics and send only those frames..... agh, I don't know what I'm doing... I've tried to follow advice from other posts but I haven't been able to apply it to my own code. PLEASE HELP!!!

    import codeanticode.syphon.*;
    import ddf.minim.*;
    import ddf.minim.analysis.*;

    Minim minim;
    // AudioPlayer song;
    AudioInput song;
    FFT fft;

    // Variables that define the "zones" of the spectrum
    // For example, for bass, we take only the first 4% of the total spectrum
    float specLow = 0.03; // 3%
    float specMid = 0.125; // 12.5%
    float specHi = 0.20; // 20%

    // This leaves 64% of the possible spectrum that will not be used.
    // These values are usually too high for the human ear anyway.

    // Scoring values for each zone
    float scoreLow = 0;
    float scoreMid = 0;
    float scoreHi = 0;

    // Previous value, to soften the reduction
    float oldScoreLow = scoreLow;
    float oldScoreMid = scoreMid;
    float oldScoreHi = scoreHi;

    // Softening value
    float scoreDecreaseRate = 125;

    // Cubes appearing in space
    int nbCubes;
    Cube[] cubes;

    //************************************************************
    PGraphics canvas;
    SyphonServer server;
    //*************************************************************

    void setup() {
      size(1980, 1080, P3D);
      canvas = createGraphics(width, height, P3D);

      // Create Syphon server to send frames out.
      server = new SyphonServer(this, "Processing Syphon");

      //Load the minim library
      minim = new Minim(this);

      //Load song
      // song = minim.loadFile("--");

      //Get live input
      song = minim.getLineIn(Minim.MONO);

      //Create the FFT object to analyze the song
      fft = new FFT(song.bufferSize(), song.sampleRate());
      fft.window(FFT.GAUSS);

      //One cube per frequency band
      nbCubes = (int)(fft.specSize()*specHi*1.25);
      cubes = new Cube[nbCubes];

      //Create all objects
      //Create cubic objects
      for (int i = 0; i < nbCubes; i++) {
        cubes[i] = new Cube();
      }
    }

    void draw() {
      //Forward the song. One draw () for each "frame" of the song ...
      fft.forward(song.mix);

      //Calculation of "scores" (power) for three categories of sound
      //First, save old values
      oldScoreLow = scoreLow;
      oldScoreMid = scoreMid;
      oldScoreHi = scoreHi;

      //Reset values
      scoreLow = 0;
      scoreMid = 0;
      scoreHi = 0;

      //Calculate the new "scores"
      for(int i = 0; i < fft.specSize()*specLow; i++) {
        scoreLow += fft.getBand(i);
      }
      for(int i = (int)(fft.specSize()*specLow); i < fft.specSize()*specMid; i++) {
        scoreMid += fft.getBand(i);
      }
      for(int i = (int)(fft.specSize()*specMid); i < fft.specSize()*specHi; i++) {
        scoreHi += fft.getBand(i);
      }

      //To slow down the descent.
      if (oldScoreLow > scoreLow) {
        scoreLow = oldScoreLow - scoreDecreaseRate;
      }
      if (oldScoreMid > scoreMid) {
        scoreMid = oldScoreMid - scoreDecreaseRate;
      }
      if (oldScoreHi > scoreHi) {
        scoreHi = oldScoreHi - scoreDecreaseRate;
      }

      //Volume for all frequencies at this time, with the highest sounds higher.
      //This allows the animation to go faster for the higher pitched sounds, which is more noticeable
      float scoreGlobal = scoreLow*0.5 + 0.7*scoreMid + 1*scoreHi;

      //Subtle color of background
      // background(scoreLow/100, scoreMid/200, scoreHi/500, 50);

      //Cube for each frequency band
      for(int i = 0; i < nbCubes; i++) {
        //Value of the frequency band
        float bandValue = fft.getBand(i);

        //The color is represented as: red for bass, green for medium sounds and blue for high.
        //The opacity is determined by the volume of the tape and the overall volume.
        cubes[i].display(scoreLow, scoreMid*2, scoreHi*5, bandValue*2, scoreGlobal);
      }

      // canvas.beginDraw();
      // // canvas.background(127);
      // canvas.clear();
      // canvas.lights();
      // canvas.translate(width/2, height/2);
      // canvas.rotateX(frameCount * 0.01);
      // canvas.rotateY(frameCount * 0.01);
      // canvas.box(150);
      // canvas.endDraw();
      // image(canvas, 0, 0);
      // server.sendImage(canvas);//I need to send to PGraphics so I can have a transparent background
      server.sendScreen();
    }

    //Class for cubes floating in space
    class Cube {
      //Z position of spawn and maximum Z position
      float startingZ = -10000;
      float maxZ = 1000;

      //Position values
      float x, y, z;
      float rotX, rotY, rotZ;
      float sumRotX, sumRotY, sumRotZ;

      //builder
      Cube() {
        //Make the cube appear in a random place
        x = random(0, width);
        y = random(0, height);
        z = random(startingZ, maxZ);

        //Give the cube a random rotation
        rotX = random(0, 1);
        rotY = random(0, 1);
        rotZ = random(0, 1);
      }

      void display(float scoreLow, float scoreMid, float scoreHi, float intensity, float scoreGlobal) {
        //Color selection, opacity determined by intensity (volume of the tape)
        color displayColor = color(scoreLow, scoreMid*2, scoreHi*5, intensity*5);
        fill(displayColor, 255);

        //Color lines, they disappear with the individual intensity of the cube
        color strokeColor = color(255, 150-(20*intensity));
        stroke(strokeColor);
        strokeWeight(0.5 + (scoreGlobal/300));

        //Creating a transformation matrix to perform rotations, enlargements
        pushMatrix();

        //Shifting
        translate(x, y, z);

        //Calculation of the rotation according to the intensity for the cube
        sumRotX += intensity*(rotX/1000);
        sumRotY += intensity*(rotY/1000);
        sumRotZ += intensity*(rotZ/1000);

        //Application of the rotation
        rotateX(sumRotX);
        rotateY(sumRotY);
        rotateZ(sumRotZ);

        //Creation of the box, variable size according to the intensity for the cube
        box(100+(intensity/2));

        //Application of the matrix
        popMatrix();

        //Z displacement
        z+= (1+(intensity/5)+(pow((scoreGlobal/150), 2)));

        //Replace the box at the back when it is no longer visible
        if (z >= maxZ) {
          x = random(0, width);
          y = random(0, height);
          z = startingZ;
        }
      }
    }

Pixel Flow - Spout

What about sending the fluid image OUT via Syphon/Spout? I haven't been able to get that to work in Processing.

Using syphon to connect processing with the Kinect to a VR wrapper

Hello Processing community. Recently I have seen some projects (this one is an example:

) where the Kinect transmits its data to VR in real time.

I discovered that Syphon may be able to make this connection, but I have never worked with it before, and the only VR platform I have worked with is the Android mode for Processing. I could not work out how to make the connection with Syphon, even with the GitHub repository https://github.com/Syphon, which is really complete. I'm quite new to coding and have some trouble understanding how it works.

I wonder whether Unity would be software where I can feed the Kinect data into VR...

Any help, or other ways to solve this, would be much appreciated. If you have already worked with Syphon, maybe you can show how to use it, even for a quite different purpose.

And thanks a lot for all the support this community gives, to me and to everyone who wants to learn about it!

Port get busy after a few times running. Is it because of P3D?

Hi,

I have run into an error using Windows 7 with an Arduino Leonardo sending data through the serial port. I tested code that only reads the data on both Mac and Windows and it works fine. Then I added a video drawn onto a canvas for Spout to send out to another program. Note that with Syphon everything works fine too. I came across an old post where someone had the same problem and fixed it by changing to OpenGL, but I also learned from a more recent post in this forum that OpenGL is now the same as P3D. **I wasn't running anything else at the same time as Processing.** The first run is usually fine, but on the second or third run the error says that my port is busy, and I have no choice but to restart the computer. Is this a bug? Is there a solution?

Here is the code that runs fine on both operating systems:

    import processing.serial.*;

    Serial myPort ;
    int value, wheel;
    String myString = null;
    int weight = 800;
    int lf = 10;

    void setup() {
      printArray(Serial.list());
      myPort = new Serial(this, Serial.list()[1], 9600);
      myPort.clear();
      myPort.bufferUntil(lf);
      myString = myPort.readStringUntil(lf);
    }

    void draw() {
      println("in draw once");
      println("fsr: "+ value);
      println("wheel position: "+ wheel);
      println("-----------");
    }

    void serialEvent(Serial p) {
      //println("access Serial port");
      myString = p.readStringUntil('\n');
      if (myString !=null) {
        //println("get data from Serial port");
        String[] fsr =split(myString, 'r');
        //printArray(fsr);
        value = int(fsr[0]);
        String trim = trim(fsr[1]);
        wheel = int(trim);
      }
    }

And this is the code that has the problem on Windows 7 (x64):

    import spout.*;// IMPORT THE SPOUT LIBRARY
    import processing.serial.*;//Serial to Arduino
    import processing.video.*;//play video

    int nSenders = 5;
    PGraphics[] canvas;
    Spout[] senders;
    int lf=10;
    Serial myPort;
    int value, wheel;
    String myString = null;
    Movie myMovie;
    int arduinoValue = 800;
    int projectorWidth = 1024;
    int projectorHeight = 768;
    //int scalar = 3;

    //-----switches------//
    Boolean arduinoSwitch = true;
    Boolean sensorSwitch = false;
    Boolean spoutSwitch = true;
    //-----------//

    void settings() {
      // size() doesn't change the size of the canvas in terms of the graphics signal sent out.
      size(projectorWidth, projectorHeight,P3D);
    }

    void setup() {
      canvas = new PGraphics[nSenders];
      for (int i=0; i<nSenders; i++) {
        canvas[i] = createGraphics(projectorWidth, projectorHeight, P3D);
      }
      printArray(Serial.list());

      // Create Spout senders to send frames out.
      if (spoutSwitch) {
        senders = new Spout[nSenders];
        for (int i = 0; i < nSenders; i++) {
          senders[i] = new Spout(this);
          String sendername = "Test "+i;
          senders[i].createSender(sendername, projectorWidth, projectorHeight);
        }
      }

      if (arduinoSwitch) {
        myPort = new Serial(this, Serial.list()[1], 9600);
        myPort.clear();
        myPort.bufferUntil(lf);
        myString = myPort.readStringUntil(lf);
      }
      myMovie = new Movie (this, "C:/Users/dma/Desktop/tuang/test1.mp4");
    }

    void draw() {
      canvas[0].beginDraw();
      canvas[0].background(255, 0, 100);
      canvas[0].image(myMovie, 0, 0);

      if (spoutSwitch) {
        for (int i = 0; i < nSenders; i++) {
          senders[i].sendTexture(canvas[i]); //send all separated spout canvases
        }
      }

      if (arduinoSwitch) {
        println("fsr: "+value);
        println("position wheel: "+wheel);
        if (value < arduinoValue) {
          sensorSwitch=true;
          myMovie.stop();
          canvas[0].background(0);
          println("nothing in the house");
        }
        if (sensorSwitch) {
          if (value>=arduinoValue) {
            println("Force sensor is detacted");
            //newRandom=true;
            //randomMovie();
            myMovie.play();
            sensorSwitch=false;
          }
        }
      }
      canvas[0].endDraw();
      image(canvas[0], 1024/2*(0%2), 768/2*(0/2));
    }

    void movieEvent(Movie m) {
      m.read();
    }

    void serialEvent(Serial p) {
      if (arduinoSwitch) {
        myString = p.readStringUntil('\n');
        if (myString != null) {
          String[] fsr = split(myString, 'r');
          //printArray(fsr);
          value = int(fsr[0]);
          String trim = trim(fsr[1]);
          wheel = int(trim);
        }
      } else {
        println("Arduino is off.");
      }
    }

    //keyboard for testing video
    void keyPressed() {
      switch (key) {
      case 'a' :
        myMovie.play();
        break;
      case 'r' :
        myMovie.stop();
        break;
      }
    }

What should I do to stop the port from being busy? I wasn't even running anything other than Processing.
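A side note on the `serialEvent()` handlers in the two listings above, separate from the busy-port question: both read `fsr[1]` without checking that the line actually contained the `r` separator, so a partial first line after opening the port can throw inside the handler. Below is a defensive parse sketched in plain Java. It assumes lines look like `<fsr>r<wheel>` as the posted code implies; the class name `SensorLine` and method `parse` are made up for illustration.

```java
import java.util.Arrays;

class SensorLine {
    // Parses a line such as "512r87\n" into {value, wheel}.
    // Returns null for anything that does not match that shape.
    static int[] parse(String line) {
        if (line == null) {
            return null;
        }
        String[] parts = line.trim().split("r");
        if (parts.length != 2) {
            return null; // separator missing or repeated
        }
        try {
            int value = Integer.parseInt(parts[0].trim());
            int wheel = Integer.parseInt(parts[1].trim());
            return new int[] { value, wheel };
        } catch (NumberFormatException e) {
            return null; // non-numeric field, e.g. a truncated line
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(parse("512r87\n"))); // prints [512, 87]
        System.out.println(parse("512") == null);               // prints true
    }
}
```

Inside `serialEvent()`, the handler would update `value` and `wheel` only when the result is not null, and skip the line otherwise.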

How should I make video stop lagging when plays and changes to another?

Hi,

I am working on a project that involves a lot of video files and interaction. I have a problem combining the sketches I wrote separately into one standalone program and keeping it as smooth as it was when they ran separately: each sketch played fine on its own, but not now that they are combined. I suspect the problem comes from the order of the code and how the videos are called. So I made it load the video files first and play them when a button is pressed on a selected name (by changing a String). I set the frame rate with frameRate(60) at the end of setup() because of the gifAnimation library I use to play GIF images. I've tried 30; it lags slightly, but I think it is actually fine. However, the videos still stutter.

Also, this program loads a lot of information up front and receives more in real time. I am working with the Twitter and Tumblr APIs and reading Arduino sensors. (The videos I'm having a problem with are not live, though.) Could this be what causes the video lag?

I don't know which part of the code I should post, so here are setup() and draw():

    void settings() {
      PJOGL.profile=1;
      if (install == true) {
        fullScreen(SPAN);
      } else {
        size((projectorWidth*3)/scalar, projectorHeight/scalar, P3D);
      }
    }

    void setup() {
      canvas = createGraphics((projectorWidth*3)/scalar, projectorHeight/scalar, P3D);
      server = new SyphonServer(this, "Processing Syphon");
      //--------///
      if (!debugging) {
        myPort = new Serial(this, Serial.list()[7], 9600); // Open whatever port is the one you're using for arduino.
        myPort.clear();
        myString = myPort.readStringUntil(lf);
        myString = null;
      }
      //Youtubue
      loadMovies(); //not live from website. it just grabs from internal folder.
      // Instagram
      runGetRecentMediaForUserChoreo();
      //Twitter
      twitterFont = createFont("Helvetica Neu Bold", 30);
      runTweetsChoreo(); // Run the Tweets Choreo function
      getTweetFromJSON(); // Parse the JSON response
      //tumblr
      runRetrievePostsWithTagChoreo();
      searchJSON();
      frameRate(60);
    }

    void draw() {
      //Loading Screen Code//
      switch(startPhase) {
      case 0:
        //println("startPhase: "+startPhase);
        thread("FilesLoading"); //Use thread() to load files or do other tasks that take time. When
        // the task is finished, set a variable that indicates the task is complete, and check that from inside your draw() method.
        startPhase++;
        return;
      case 1: //call function to load files.
        //println("startPhase: "+startPhase);
        Loadingtxt();
        return;
      case 2: //currently loading files.
        canvas.beginDraw();
        if (!debugging) {
          while (myPort.available() > 0) {
            myString = myPort.readStringUntil(lf);
            if (myString != null) {
              myString = trim(myString);
              value = int(myString);
              println(value);
              if (value <275) {
                sensorSwitch=true;
                myMovie[num].stop();
                background(0);
              }
              if (sensorSwitch) {
                if (value>=275) {
                  println("Force sensor is detacted");
                  newRandom=true;
                  randomMovie();
                  sensorSwitch=false;
                }
              }
            }
          }
          if (value<275) {
            canvas.background(0);
            println("black background is on top.");
          }
        }
        canvas.textAlign(CENTER, CENTER);
        canvas.textSize(14);
        canvas.fill(255);
        canvas.text("Movie is not playing.", width/2, height/2);
        canvas.background(0);
        displayYoutube();
        displayText();
        displayPic();
        displayTumblr();
        displayGif();
        /*if (!debugging) {
          if (value<275) {
            canvas.background(0);
            println("black background is on top.");
          }
        }*/
        canvas.endDraw();
        image(canvas, 0, 0);
        server.sendImage(canvas);
        randomMovie();
        return;
      }
    }

Thanks.

Syphone Code

Syphon, not Syphone? It is harder to figure out what you need if you don't spell the library name correctly.

The Syphon library comes with examples in the examples directory -- they are installed in PDE when you add the library. Have you looked at them? Have you tried them? https://github.com/Syphon/Processing/tree/master/examples

Here is an old tutorial: https://socram484.wordpress.com/2013/09/12/using-syphon-with-processing-into-madmapper/

Resolume into Processing

Anyone know how to get Resolume Arena output into a processing sketch? Maybe through syphon? (i'm on a mac)

How Smooth out Noisy Video

Re:

There is trouble with the Syphon code when it comes to using a tint within a PGraphics "canvas" in a P3D sketch.

If you want help from the forum solving your Syphon / PGraphics.tint() bug: explain clearly what it is and provide short demo code.

How Smooth out Noisy Video

Alright. After much working this evening I have found that:

A) The tint() method works fine to do a little accumulation of previous frames. It does have some odd ghosting artifacts that are undesirable but end up working fine within the framework of OpenCV.

B) There is trouble with the Syphon code when it comes to using a tint within a PGraphics "canvas" in a P3D sketch.

Don't ask me why just yet; all I know is that this is the only thorn sticking out. A thorn you reach about 1% from the finish line...

[HTTP-Requests] cookies - SPTrans Olho Vivo

Hello

I'm building a visualization tool that shows the current position of the buses in service. There's an API that delivers these positions in response to a GET request. The return value is JSON.

I'm authenticating without problems, but when I request anything it denies me access. Its poor documentation doesn't say that cookies are needed for the request to return data.

The final product will be a mapped projection of this map, sent via Syphon to Resolume Arena. I need help getting this cookie and handling it so that my requests succeed.

    import http.requests.*;

    String baseURL="http://"+"api.olhovivo.sptrans.com.br/v0";
    String token="2bc28344ead24b3b5fe273ec7389d2e4d14abd2bad45abe6d8d774026193f894";
    String route="7272";

    void setup() {
      size(1200, 1800);
      //API Olho Vivo
      authentication();
      getLinha(route);
    }

    void authentication() {
      String reqURL = "/Login/Autenticar?token=";
      PostRequest post = new PostRequest(baseURL+reqURL+token);
      post.send();
      println("Reponse Content: " + post.getContent());
      println("Reponse Content-Length Header: " + post.getHeader("Content-Length"));
    }

    void getLinha(String busLine) {
      String reqURL = "/Posicao?codigoLinha=";
      GetRequest get = new GetRequest(baseURL+reqURL+busLine);
      get.send();
      println("Reponse Content: " + get.getContent());
      println("Reponse Content-Length Header: " + get.getHeader("Content-Length"));
    }

Responses:

    Reponse Content: true
    Reponse Content-Length Header: 4
    May 15, 2017 12:05:34 AM org.apache.http.impl.client.DefaultRequestDirector handleResponse
    WARNING: Authentication error: Unable to respond to any of these challenges: {}
    Reponse Content: {"Message":"Authorization has been denied for this request."}
    Reponse Content-Length Header: 61

API documentation: http://www.sptrans.com.br/desenvolvedores/APIOlhoVivo/Documentacao.aspx?1
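If the API does rely on a session cookie, as the post suspects, the general pattern is to read the `Set-Cookie` header from the login response and send its `name=value` part back in a `Cookie` header on later requests. The plain-Java sketch below shows only that string handling. The class `CookieHeader`, the method `fromSetCookie`, and the cookie name `apiCredentials` in the example are placeholders, not confirmed details of the SPTrans API or of the HTTP Requests library.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class CookieHeader {
    // Builds the value of a "Cookie" request header from raw "Set-Cookie"
    // response header values, keeping only each leading name=value pair
    // and dropping attributes such as path or HttpOnly.
    static String fromSetCookie(List<String> setCookieValues) {
        List<String> pairs = new ArrayList<>();
        for (String raw : setCookieValues) {
            if (raw == null) {
                continue;
            }
            String pair = raw.split(";", 2)[0].trim();
            if (pair.contains("=")) {
                pairs.add(pair);
            }
        }
        return String.join("; ", pairs);
    }

    public static void main(String[] args) {
        // Hypothetical header value, for illustration only.
        List<String> received = Arrays.asList("apiCredentials=ABC123; path=/; HttpOnly");
        System.out.println(fromSetCookie(received)); // prints apiCredentials=ABC123
    }
}
```

In the sketch above, `post.getHeader("Set-Cookie")` would be the natural source for the raw value. Whether the installed version of the library lets you attach a `Cookie` header to the following `GetRequest` is something to check in its documentation.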