
does processing 3.3.5 support skeleton tracking via kinect?

Update

Now Processing 3 is compatible with Kinect V2, link here

How to get skeleton tracking and body mask if is not library included?

What a wonderful resource, @totovr76! Your SimpleOpenni repository should be extremely helpful for people trying to put Kinect skeleton tracking into production.

How to get skeleton tracking and body mask if is not library included?

Instructions

I set up the instructions in this repository

Hardware

- Kinect V1

- Kinect V2

Software

- Processing 2.2.1

- Processing 3.3.6

- Processing 3.3.7

Getting a skeleton

Processing 3.3.7, Kinect V1 or V2, a Mac, and the SimpleOpenni library

SimpleOpenni with Processing 3.3.6 working

Can anyone get the library to work on a Mac? For me it never gets further than this line:

```
context = new SimpleOpenNI(this);
```

Get Usermap for each user

Hi everyone,

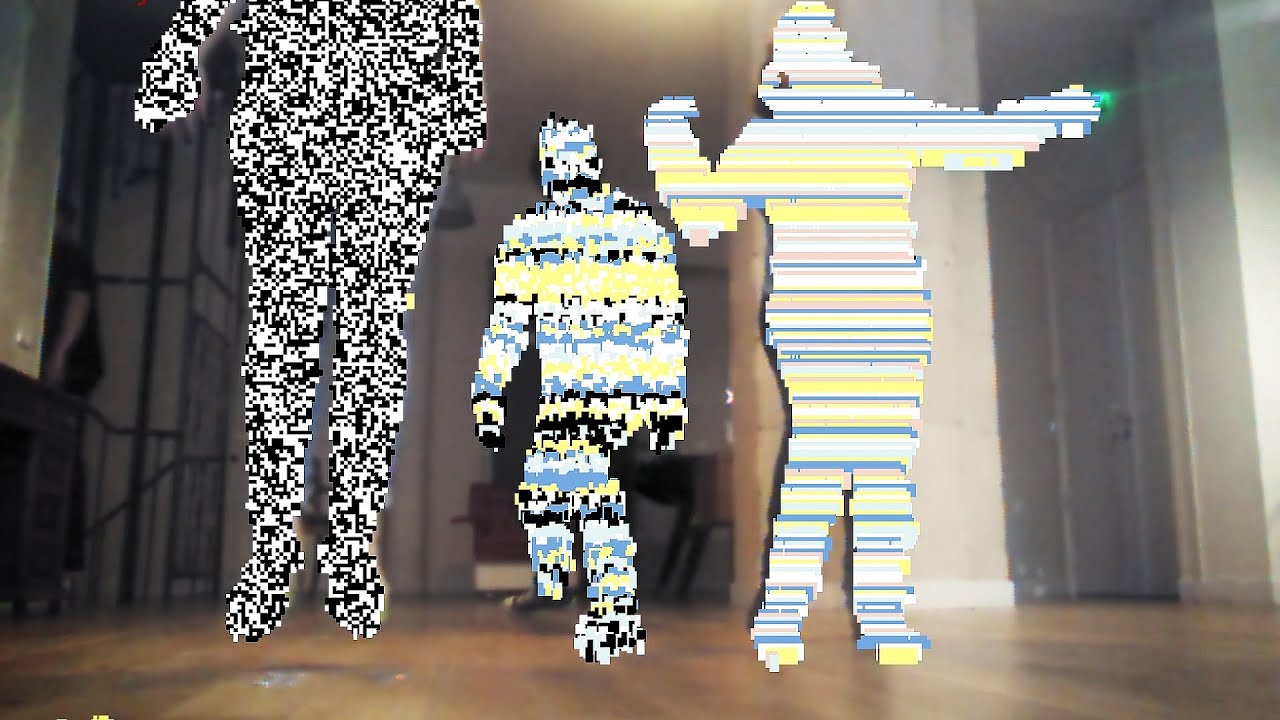

I'm trying to make a Processing program in which each person (silhouette) is assigned a different glitch. Essentially, something like this.

Right now we can only assign the same glitch to every person.

This is our code:

```
import processing.opengl.*;
import SimpleOpenNI.*;

class User {
  int userid;
  int glitchid;

  User(SimpleOpenNI curContext, int id) {
    // note: random(1, 2) returns a float in [1, 2), so this cast always gives 1
    glitchid = (int)random(1, 2);
    userid = id;
  }

  User() {
  }
}

SimpleOpenNI kinect;
int userID;
int[] userMap;
int randomNum;
float red;
float green;
float blue;
PImage rgbImage;
int count;
ArrayList<User> users = new ArrayList<User>();

void setup() {
  size(640, 480, P3D);
  kinect = new SimpleOpenNI(this);
  kinect.setMirror(true);
  kinect.enableDepth();
  kinect.enableUser();
  kinect.enableRGB();
}

void draw() {
  kinect.update();
  rgbImage = kinect.rgbImage();
  image(rgbImage, 0, 0);

  int[] userList = kinect.getUsers();
  for (int i = 0; i < userList.length; i++) {
    // note: i indexes userList, so users.get(i) is not necessarily the User for userList[i]
    int glitch = users.get(i).glitchid;
    if (glitch == 1) {
      crt();
    } else {
      crtcolor();
    }
  }
  stroke(100);
  smooth();
}

void onNewUser(SimpleOpenNI curContext, int userId) {
  println("onNewUser - userId: " + userId);
  User u = new User(curContext, userId);
  users.add(u);
  println("glitchid " + u.glitchid);
  curContext.startTrackingSkeleton(userId);
}

void onLostUser(SimpleOpenNI curContext, int userId) {
  println("onLostUser - userId: " + userId);
}

void crtcolor() {
  color randomColor = color(random(255), random(255), random(255), 255);
  boolean flag = false;
  if (kinect.getNumberOfUsers() > 0) {
    userMap = kinect.userMap();
    loadPixels();
    for (int x = 0; x < width; x++) {
      for (int y = 0; y < height; y++) {
        int loc = x + y * width;
        if (userMap[loc] != 0) { // true for the pixels of every tracked user
          if (random(100) < 50 || (flag == true && random(100) < 80)) {
            flag = true;
            color pixelColor = pixels[loc];
            float mixPercentage = .5 + random(50)/100;
            pixels[loc] = lerpColor(pixelColor, randomColor, mixPercentage);
          } else {
            flag = false;
            randomColor = color(random(255), random(255), random(255), 255);
          }
        }
      }
    }
    updatePixels();
  }
}

void crt() {
  if (kinect.getNumberOfUsers() > 0) {
    userMap = kinect.userMap();
    loadPixels();
    for (int x = 0; x < width; x++) {
      for (int y = 0; y < height; y++) {
        int loc = x + y * width;
        if (userMap[loc] != 0) { // true for the pixels of every tracked user
          pixels[loc] = color(random(255));
        }
      }
    }
    updatePixels();
  }
}
```

I know that userMap gives me the pixels of all users, and in my crt()/crtcolor() code I am changing all of those pixels, which is why it isn't working. Is there a way or function that gives me the pixels associated with each user instead?
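A possible answer, sketched under assumptions: the posted code tests `userMap[loc] != 0`, which is true for every tracked user at once, so whichever glitch runs last paints all silhouettes. If, as that test suggests, each nonzero entry of `kinect.userMap()` is the ID of the user covering that pixel, you can compare against one ID at a time and look the glitch up by ID (a `HashMap` keyed by `userId`, rather than `users.get(i)`, whose index need not match the order of `getUsers()`). The plain-Java class below is a hypothetical illustration, not part of SimpleOpenNI: `PerUserGlitch`, the two toy effects, and the ARGB `int[]` standing in for Processing's `pixels[]` are all assumptions.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

// Hypothetical helper: one glitch per user ID, applied only to that user's pixels.
class PerUserGlitch {
    // userId -> glitch number (1 or 2); keyed by ID, not by list position
    final Map<Integer, Integer> glitchByUser = new HashMap<>();
    final Random rng = new Random();

    // Call from onNewUser(): picks glitch 1 or 2 with equal probability
    void onNewUser(int userId) {
        glitchByUser.put(userId, 1 + rng.nextInt(2));
    }

    // Call from onLostUser() so stale IDs do not accumulate
    void onLostUser(int userId) {
        glitchByUser.remove(userId);
    }

    // pixels: ARGB ints (like Processing's pixels[]); userMap: same length,
    // 0 = background, otherwise the ID of the user at that pixel (assumption)
    void apply(int[] pixels, int[] userMap) {
        for (int loc = 0; loc < pixels.length; loc++) {
            int id = userMap[loc];
            if (id == 0) continue;                  // background pixel
            Integer glitch = glitchByUser.get(id);
            if (glitch == null) continue;           // user not registered yet
            pixels[loc] = (glitch == 1) ? invert(pixels[loc]) : grayscale(pixels[loc]);
        }
    }

    // Toy effect 1: invert R, G and B, keep alpha
    static int invert(int argb) {
        return (argb & 0xFF000000) | (~argb & 0x00FFFFFF);
    }

    // Toy effect 2: write the average of R, G and B into all three channels
    static int grayscale(int argb) {
        int r = (argb >> 16) & 0xFF, g = (argb >> 8) & 0xFF, b = argb & 0xFF;
        int v = (r + g + b) / 3;
        return (argb & 0xFF000000) | (v << 16) | (v << 8) | v;
    }
}
```

In the sketch you would call `onNewUser(userId)` and `onLostUser(userId)` from the SimpleOpenNI callbacks and `apply(pixels, kinect.userMap())` once per frame between `loadPixels()` and `updatePixels()`. That assumes, as the original code already does, that the user map and the canvas are both 640×480 and line up pixel for pixel.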

Getting a skeleton

Years ago I managed to use Processing (1.5? 2? I can't say) with one of the Kinect libraries (maybe SimpleOpenNi, which doesn't seem to be available anymore) to get the joints of a skeleton. It worked fine at that time. This year, nothing works at all, or almost nothing: I'm able to get a point cloud with OpenKinect, but only on Ubuntu, not on the other operating systems I use (Windows 7 and Windows 10). I'm quite lost; I have tried many old driver/SDK installations, etc.

So my question is: can somebody who has actually (and recently) managed to get a skeleton tell me:

- which operating system was used
- which version of Processing
- which Processing library (SimpleOpenNi, Kinect4WinSDK, OpenKinect, Kinect V2)
- which driver or SDK was used

It would be a huge help.

Move a servo motor (Ax-12) with arduino, processing and kinect

I also ran your Processing code. It did not run properly.

Try this (just change COM3 to your Arduino's port):

```
import SimpleOpenNI.*;
import processing.serial.*;

int pos = 0;
Serial myPort;
SimpleOpenNI context;
color[] userClr = new color[] {
  color(255, 0, 0), color(0, 255, 0), color(0, 0, 255),
  color(255, 255, 0), color(255, 0, 255), color(0, 255, 255)
};
PVector com = new PVector();
PVector com2d = new PVector();

void setup() {
  size(640, 480);
  println(Serial.list());
  // rename COM3 to the arduino port
  myPort = new Serial(this, "COM3", 9600);
  context = new SimpleOpenNI(this);
  if (context.isInit() == false) {
    println("Can't init SimpleOpenNI, maybe the camera is not connected!");
    exit();
    return;
  }
  // enable depthMap generation
  context.enableDepth();
  // enable skeleton generation for all joints
  context.enableUser();
  context.enableRGB();
  background(200, 0, 0);
  stroke(0, 0, 255);
  strokeWeight(3);
  smooth();
}

void draw() {
  // update the cam
  context.update();
  // draw depthImageMap
  //image(context.depthImage(),0,0);
  image(context.userImage(), 0, 0);
  // draw the skeleton if it's available
  IntVector userList = new IntVector();
  context.getUsers(userList);
  if (userList.size() > 0) {
    int userId = userList.get(0);
    drawSkeleton(userId);
  }
}

// draw the skeleton with the selected joints
void drawSkeleton(int userId) {
  // this is where we choose which body part to track
  PVector jointPos = new PVector();
  context.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_HEAD, jointPos);
  PVector convertedHead = new PVector();
  context.convertRealWorldToProjective(jointPos, convertedHead);
  // draw an ellipse over the tracked body part
  fill(255, 0, 0);
  ellipse(convertedHead.x, convertedHead.y, 20, 20);

  //draw YOUR Right Shoulder
  PVector jointPosLS = new PVector();
  context.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_LEFT_SHOULDER, jointPosLS);
  PVector convertedLS = new PVector();
  context.convertRealWorldToProjective(jointPosLS, convertedLS);
  //int LS = convertedLS.x, convertedLS.y

  //draw YOUR Right Elbow
  PVector jointPosLE = new PVector();
  context.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_LEFT_ELBOW, jointPosLE);
  PVector convertedLE = new PVector();
  context.convertRealWorldToProjective(jointPosLE, convertedLE);
  fill(200, 200, 200);
  ellipse(convertedLE.x, convertedLE.y, 20, 20);

  // shoulder-to-elbow angle in degrees, sent to the Arduino
  // note: Serial.write(int) sends a single byte, so negative angles wrap around
  int anguloLSE = int(degrees(atan2(convertedLS.x - convertedLE.x, convertedLS.y - convertedLE.y)));
  println(anguloLSE);
  myPort.write(anguloLSE);

  // draws the whole skeleton; comment these lines out to hide it
  context.drawLimb(userId, SimpleOpenNI.SKEL_HEAD, SimpleOpenNI.SKEL_NECK);
  context.drawLimb(userId, SimpleOpenNI.SKEL_NECK, SimpleOpenNI.SKEL_LEFT_SHOULDER);
  context.drawLimb(userId, SimpleOpenNI.SKEL_LEFT_SHOULDER, SimpleOpenNI.SKEL_LEFT_ELBOW);
  context.drawLimb(userId, SimpleOpenNI.SKEL_LEFT_ELBOW, SimpleOpenNI.SKEL_LEFT_HAND);
  context.drawLimb(userId, SimpleOpenNI.SKEL_NECK, SimpleOpenNI.SKEL_RIGHT_SHOULDER);
  context.drawLimb(userId, SimpleOpenNI.SKEL_RIGHT_SHOULDER, SimpleOpenNI.SKEL_RIGHT_ELBOW);
  context.drawLimb(userId, SimpleOpenNI.SKEL_RIGHT_ELBOW, SimpleOpenNI.SKEL_RIGHT_HAND);
  context.drawLimb(userId, SimpleOpenNI.SKEL_LEFT_SHOULDER, SimpleOpenNI.SKEL_TORSO);
  context.drawLimb(userId, SimpleOpenNI.SKEL_RIGHT_SHOULDER, SimpleOpenNI.SKEL_TORSO);
  context.drawLimb(userId, SimpleOpenNI.SKEL_TORSO, SimpleOpenNI.SKEL_LEFT_HIP);
  context.drawLimb(userId, SimpleOpenNI.SKEL_LEFT_HIP, SimpleOpenNI.SKEL_LEFT_KNEE);
  context.drawLimb(userId, SimpleOpenNI.SKEL_LEFT_KNEE, SimpleOpenNI.SKEL_LEFT_FOOT);
  context.drawLimb(userId, SimpleOpenNI.SKEL_TORSO, SimpleOpenNI.SKEL_RIGHT_HIP);
  context.drawLimb(userId, SimpleOpenNI.SKEL_RIGHT_HIP, SimpleOpenNI.SKEL_RIGHT_KNEE);
  context.drawLimb(userId, SimpleOpenNI.SKEL_RIGHT_KNEE, SimpleOpenNI.SKEL_RIGHT_FOOT);
}

// -----------------------------------------------------------------
// SimpleOpenNI events

void onNewUser(SimpleOpenNI curContext, int userId) {
  println("onNewUser - userId: " + userId);
  println("\tstart tracking skeleton");
  curContext.startTrackingSkeleton(userId);
}

void onLostUser(SimpleOpenNI curContext, int userId) {
  println("onLostUser - userId: " + userId);
}

void onVisibleUser(SimpleOpenNI curContext, int userId) {
  //println("onVisibleUser - userId: " + userId);
}

void keyPressed() {
  switch(key) {
  case ' ':
    context.setMirror(!context.mirror());
    break;
  }
}
```

does processing 3.3.5 support skeleton tracking via kinect?

SimpleOpenni only works with V1, sorry! But you can try using this; I was able to set it up on my Mac!

Skeleton Tracking Processing 3.3.6 OSX

Wow, amazing.

Yes, what did you use as libraries and dependencies? What does your sketch look like? I was also wondering how to send all the joints through OSC.

I am trying this code that I tweaked a little:

```
import SimpleOpenNI.*;

SimpleOpenNI kinect;
PImage handImage;

void setup() {
  // size() should be the first line in setup()
  size(640, 480);
  smooth();

  kinect = new SimpleOpenNI(this);
  kinect.enableDepth();
  kinect.enableUser();
  kinect.setMirror(false);
  kinect.enableRGB();

  // Hand.png must be placed in the sketch's data folder
  handImage = loadImage("Hand.png");
}

void draw() {
  kinect.update();
  image(kinect.rgbImage(), 0, 0);

  IntVector userList = new IntVector();
  kinect.getUsers(userList);

  if (userList.size() > 0) {
    int userId = userList.get(0);

    float rightArmAngle = getJointAngle(userId, SimpleOpenNI.SKEL_RIGHT_HAND, SimpleOpenNI.SKEL_RIGHT_ELBOW);

    PVector handPos = new PVector();
    kinect.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_RIGHT_HAND, handPos);
    PVector convertedHandPos = new PVector();
    kinect.convertRealWorldToProjective(handPos, convertedHandPos);

    //float newImageWidth = map(convertedHandPos.z, 500, 2000, handImage.width/6, handImage.width/12);
    //float newImageHeight = map(convertedHandPos.z, 500, 2000, handImage.height/6, handImage.height/12);
    float newImageWidth = convertedHandPos.z * 0.1;
    float newImageHeight = convertedHandPos.z * 0.2;
    newImageWidth = constrain(newImageWidth, 75, 100);

    pushMatrix();
    translate(convertedHandPos.x, convertedHandPos.y);
    rotate(rightArmAngle * -1);
    rotate(PI/2);
    image(handImage, -1 * (newImageWidth/2), 0 - (newImageHeight/2), newImageWidth, newImageHeight);
    popMatrix();
  }
}

float getJointAngle(int userId, int jointID1, int jointID2) {
  PVector joint1 = new PVector();
  PVector joint2 = new PVector();
  kinect.getJointPositionSkeleton(userId, jointID1, joint1);
  kinect.getJointPositionSkeleton(userId, jointID2, joint2);
  return atan2(joint1.y - joint2.y, joint1.x - joint2.x);
}

// user-tracking callbacks
// SimpleOpenNI 1.96 (as in the other sketches in this thread) uses the
// two-argument signature; a one-argument onNewUser(int) is not called
void onNewUser(SimpleOpenNI curContext, int userId) {
  curContext.startTrackingSkeleton(userId);
}
```

But I got this error message:

```
The file "Hand.png" is missing or inaccessible, make sure the URL is valid or that the file has been added to your sketch and is readable.
Isochronous transfer error: 1
```

If you have any ideas to help a newbie, that would be nice.

Keep up the good work, totovr!
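On the OSC question in this post: the usual route in Processing is the oscP5 library (`OscMessage`, `NetAddress`). If you would rather avoid a dependency, OSC 1.0 messages are simple enough to encode by hand and send over UDP: the address and the type-tag string are NUL-terminated ASCII padded to a multiple of 4 bytes, followed by big-endian 32-bit floats. The sketch below is a minimal plain-Java encoder under those rules; the class name `OscJoints` and the per-joint address scheme `/user/<id>/<joint>` with x, y, z arguments are hypothetical choices, not something a receiver requires.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hand-rolled OSC 1.0 encoder for messages whose arguments are all float32.
class OscJoints {
    // OSC-string: ASCII bytes, then 1..4 NUL bytes so the length is a multiple of 4
    static void writeOscString(ByteArrayOutputStream out, String s) {
        byte[] b = s.getBytes(StandardCharsets.US_ASCII);
        out.write(b, 0, b.length);
        int pad = 4 - (b.length % 4);
        for (int i = 0; i < pad; i++) out.write(0);
    }

    // Address, type tags (",fff..."), then each float as 4 big-endian bytes
    static byte[] encode(String address, float... args) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeOscString(out, address);
        StringBuilder tags = new StringBuilder(",");
        for (int i = 0; i < args.length; i++) tags.append('f');
        writeOscString(out, tags.toString());
        ByteBuffer buf = ByteBuffer.allocate(4 * args.length); // big-endian by default
        for (float f : args) buf.putFloat(f);
        out.write(buf.array(), 0, buf.capacity());
        return out.toByteArray();
    }

    // Sends one joint as /user/<id>/<joint> x y z (hypothetical address scheme)
    static void sendJoint(DatagramSocket socket, InetAddress host, int port,
                          int userId, String joint, float x, float y, float z) throws IOException {
        byte[] msg = encode("/user/" + userId + "/" + joint, x, y, z);
        socket.send(new DatagramPacket(msg, msg.length, host, port));
    }
}
```

In the sketch you would loop over the `SimpleOpenNI.SKEL_*` joints you care about, fill a `PVector` with `getJointPositionSkeleton()`, and call `sendJoint(socket, InetAddress.getByName("127.0.0.1"), 12000, userId, "head", p.x, p.y, p.z)`; the host and port 12000 are placeholders for wherever your OSC receiver listens.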

does processing 3.3.5 support skeleton tracking via kinect?

totovr76, I am a beginner with Processing. I have added the library and I am using a Kinect 2, but it displays the following:

```
SimpleOpenNI Error: Can't open device: DeviceOpen using default: no devices found
SimpleOpenNI not initialised
SimpleOpenNI not initialised
```

Can you help me fix it?

Changing opacity of silhouettes

Hi, using Daniel Shiffman's MinMaxThreshold tutorial, I was able to change the colour from red to blue to green based on a person's distance from the Kinect. I would like to make a wall where, when two people walk past each other, their silhouette colours mix. I tried playing with opacity over a background image, but it wouldn't mix two different silhouettes detected by the Kinect. Should I use blob detection to get the Kinect to detect multiple people, and how would I do this? I am using a Kinect2 with Processing 3, and it seems SimpleOpenNI doesn't work with Kinect2? Thanks!
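On the blob-detection question: Kinect2's `getRawDepth()` gives distances but no user labels (unlike SimpleOpenNI's `userMap()`), so one way to separate people is connected-component labelling of the depth band you already threshold. Each group of 4-connected in-band pixels becomes one blob with its own label, and each label can then be coloured independently. This is a plain-Java sketch assuming raw depth in millimetres with 0 meaning "no reading"; `DepthBlobs`, `minDepth`, `maxDepth` and the `minPixels` noise cut-off are hypothetical names. Two people who touch or overlap in the image will merge into a single blob, which is the main limitation of this approach.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Connected-component labelling of a thresholded depth image.
class DepthBlobs {
    // True if a depth reading (assumed mm, 0 = no reading) lies inside the band
    static boolean inBand(int d, int minDepth, int maxDepth) {
        return d >= minDepth && d <= maxDepth;
    }

    // Returns one label per pixel: 0 = background, 1..n = blob number.
    // Blobs smaller than minPixels are discarded as noise.
    static int[] label(int[] depth, int w, int h, int minDepth, int maxDepth, int minPixels) {
        int[] labels = new int[w * h];
        ArrayDeque<Integer> queue = new ArrayDeque<>();
        List<Integer> members = new ArrayList<>();
        int next = 1;
        for (int start = 0; start < w * h; start++) {
            if (labels[start] != 0 || !inBand(depth[start], minDepth, maxDepth)) continue;
            // Breadth-first flood fill from this unlabelled in-band seed pixel
            members.clear();
            labels[start] = next;
            queue.add(start);
            while (!queue.isEmpty()) {
                int p = queue.poll();
                members.add(p);
                int x = p % w, y = p / w;
                int[] neighbours = {
                    x > 0 ? p - 1 : -1,      // left
                    x < w - 1 ? p + 1 : -1,  // right
                    y > 0 ? p - w : -1,      // up
                    y < h - 1 ? p + w : -1   // down
                };
                for (int q : neighbours) {
                    if (q >= 0 && labels[q] == 0 && inBand(depth[q], minDepth, maxDepth)) {
                        labels[q] = next;
                        queue.add(q);
                    }
                }
            }
            if (members.size() < minPixels) {
                for (int p : members) labels[p] = -1; // noise: marked as visited
            } else {
                next++;
            }
        }
        // Turn noise markers back into background
        for (int i = 0; i < labels.length; i++) {
            if (labels[i] == -1) labels[i] = 0;
        }
        return labels;
    }
}
```

With something like `DepthBlobs.label(depth, kinect2.depthWidth, kinect2.depthHeight, 500, 1500, 500)` you could pick a colour per label when filling `kin.pixels`. Labels are assigned in scan order every frame, so label 1 is not guaranteed to be the same person from one frame to the next.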

Here's the code:

```
import org.openkinect.processing.*;

// Kinect Library object
Kinect2 kinect2;

//float minThresh = 480;
//float maxThresh = 830;

PImage kin;
PImage bg;

void setup() {
  size(512, 424, P3D);
  kinect2 = new Kinect2(this);
  kinect2.initDepth();
  kinect2.initDevice();
  kin = createImage(kinect2.depthWidth, kinect2.depthHeight, RGB);
  bg = loadImage("1219690.jpg");
}

void draw() {
  background(0);
  //loadPixels();
  tint(255, 254);
  image(bg, 0, 0);
  kin.loadPixels();

  //minThresh = map(mouseX, 0, width, 0, 4500);
  //maxThresh = map(mouseY, 0, height, 0, 4500);

  // Get the raw depth as array of integers
  int[] depth = kinect2.getRawDepth();

  //float sumX = 0;
  //float sumY = 0;
  //float totalPixels = 0;

  for (int x = 0; x < kinect2.depthWidth; x++) {
    for (int y = 0; y < kinect2.depthHeight; y++) {
      int offset = x + y * kinect2.depthWidth;
      int d = depth[offset];
      //println(d);
      //delay (10);
      tint(255, 127);
      if (d < 500) {
        kin.pixels[offset] = color(255, 0, 0);
        //sumX += x;
        //sumY += y;
        //totalPixels++;
      } else if (d > 500 && d < 1000) {
        kin.pixels[offset] = color(0, 255, 0);
      } else if (d > 1000 && d < 1500) {
        kin.pixels[offset] = color(0, 0, 255);
      } else {
        kin.pixels[offset] = color(0);
      }
    }
  }
  kin.updatePixels();
  image(kin, 0, 0);

  //float avgX = sumX / totalPixels;
  //float avgY = sumY / totalPixels;
  //fill(150,0,255);
  //ellipse(avgX, avgY, 64, 64);
  //fill(255);
  //textSize(32);
  //text(minThresh + " " + maxThresh, 10, 64);
}
```

Question:SimpleOpenNI, I would want to limit "range of detection".

I am using a Kinect v1 and SimpleOpenNI.

I only want to detect a person within a range of 1 m to 2 m, but the Kinect detects and tracks the person the entire time they are within its range.

I would like to know a method to limit the detection range of the Kinect.

I would like to limit the range to 2 m (width) × 2 m (height) × 2 m (depth).

Thank you m(__)m
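One way to approach this, as a hedged sketch: SimpleOpenNI will still detect everyone in view, but you can ignore any user whose centre of mass lies outside your box. SimpleOpenNI's `getCoM(userId, position)` (used in the server sketch further down this page) fills a `PVector` with that centre; I'm assuming its real-world coordinates are in millimetres with the sensor at the origin, x and y centred on its axis and z pointing away from it, so please print a few values to confirm before relying on the bounds. `DetectionBox` is a hypothetical helper, and the numbers are placeholders for your 2 m × 2 m × 2 m region.

```java
// Axis-aligned box in the sensor's real-world coordinates (assumed millimetres).
class DetectionBox {
    final float minX, maxX, minY, maxY, minZ, maxZ;

    DetectionBox(float minX, float maxX, float minY, float maxY, float minZ, float maxZ) {
        this.minX = minX; this.maxX = maxX;
        this.minY = minY; this.maxY = maxY;
        this.minZ = minZ; this.maxZ = maxZ;
    }

    // Box of the given width and height centred on the sensor axis,
    // covering depths from nearZ to farZ
    static DetectionBox centred(float width, float height, float nearZ, float farZ) {
        return new DetectionBox(-width / 2, width / 2, -height / 2, height / 2, nearZ, farZ);
    }

    // True if the point (e.g. a user's centre of mass) is inside; edges count as inside
    boolean contains(float x, float y, float z) {
        return x >= minX && x <= maxX
            && y >= minY && y <= maxY
            && z >= minZ && z <= maxZ;
    }
}
```

In `draw()`, after `kinect.getCoM(userId, com)`, you would skip that user with `if (!box.contains(com.x, com.y, com.z)) continue;`. For example, `DetectionBox.centred(2000, 2000, 1000, 3000)` describes a box 2 m wide and 2 m high whose 2 m of depth starts 1 m from the sensor.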

does processing 3.3.5 support skeleton tracking via kinect?

SimpleOpenni with Processing 3.3.6 working

I am trying it on Windows 7, but I get this error:

```
SimpleOpenNI Version 1.96
After initialization:
SimpleOpenNI Error: Can't open device: DeviceOpen using default: no devices found
Can't init SimpleOpenNI, maybe the camera is not connected!
```

Why am I getting errors on my server/client data parsing? Why does my data seem to stop sending?

I am currently trying to set up a LAN between two computers to send motion-tracking data from one location to another. My code appears to work for a random length of time (anything from 2 seconds up to a minute), and then I get one of two errors on different lines of code: `StringIndexOutOfBoundsException: String index out of range: -1` or `ArrayIndexOutOfBoundsException: 1`. I have marked in the code comments which lines the errors occur on.

I really have no idea why this is happening. Could it be something to do with the rate at which the data is being sent, i.e. frameRate? Or am I doing something wrong when parsing the strings into arrays?

There doesn't seem to be any correlation with the data it crashes on. All I can think of at the moment is that the data is not being communicated quickly enough. I tried a frameRate of 10 and it seemed to work for longer, but I'm not sure whether that was just a coincidence. The server keeps generating and sending data, but the client stops responding.
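A likely explanation, offered as a hypothesis: the connection is TCP, which delivers a byte stream rather than separate messages, so a single `c.readString()` in the client code can return half a line, or the tail of one line plus the start of the next. If a chunk contains no `"\n"`, `indexOf` returns -1 and `substring(0, -1)` throws `StringIndexOutOfBoundsException: String index out of range: -1`; if the text before the first `"\n"` is the tail of a message with no `#`, `split` returns one element and `data[1]` throws the `ArrayIndexOutOfBoundsException`. That would also explain why it fails after a random amount of time. The usual remedy is to buffer until a complete line has arrived: `processing.net.Client` has `readStringUntil('\n')`, which returns `null` when no full line is available yet. Below is a plain-Java version of the same buffering plus a parser that rejects malformed lines instead of crashing; `LineFramer` is a hypothetical name. Separately, note that the server formats with `nfc()`, which inserts thousands separators, so x values of 1000 px or more would be sent as e.g. `1,234.5`, which does not parse as a number; `nf()` does not add commas.

```java
// Accumulates TCP chunks, hands back only complete "\n"-terminated lines,
// and parses "x1:x2:...#y1:y2:..." into two float arrays.
class LineFramer {
    private final StringBuilder pending = new StringBuilder();

    // Add whatever readString() returned; it may be a partial line or several lines
    void append(String chunk) {
        if (chunk != null) pending.append(chunk);
    }

    // Returns the next complete line without its "\n", or null if none has arrived yet
    String nextLine() {
        int nl = pending.indexOf("\n");
        if (nl < 0) return null;
        String line = pending.substring(0, nl);
        pending.delete(0, nl + 1);
        return line;
    }

    // Returns {xs, ys}, or null (instead of throwing) if the line is malformed
    static float[][] parse(String line) {
        String[] parts = line.split("#", -1);
        if (parts.length != 2) return null;
        String[] xs = parts[0].split(":");
        String[] ys = parts[1].split(":");
        if (xs.length != ys.length) return null;
        float[][] out = new float[2][xs.length];
        try {
            for (int i = 0; i < xs.length; i++) {
                out[0][i] = Float.parseFloat(xs[i].trim());
                out[1][i] = Float.parseFloat(ys[i].trim());
            }
        } catch (NumberFormatException e) {
            return null;
        }
        return out;
    }
}
```

In the client's `draw()` you would append every chunk while `c.available() > 0`, then loop `while ((line = framer.nextLine()) != null)` and draw only the lines for which `parse` returns non-null. Using `readStringUntil('\n')` directly achieves the same framing without the extra class.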

Any help would be greatly appreciated.

This is the client code (the one it crashes on that needs work):

```
import processing.net.*;

Client c;
String input;
String inputB;
String data[];
float xArr[];
float yArr[];

void setup() {
  size(displayWidth, displayHeight);
  background(204);
  stroke(0);
  frameRate(10); // Slow it down a little
  // Connect to the server's IP address and port
  c = new Client(this, "192.168.0.2", 61514); // Replace with your server's IP and port
}

void draw() {
  // Receive data from server
  if (c.available() > 0) {
    input = c.readString();
    println("Input");
    println(input);
    inputB = input.substring(0, input.indexOf("\n")); // Only up to the newline - this is where the first error happens
    data = split(inputB, '#'); // Split values into an array
    println("Data");
    printArray(data);
    xArr = float(split(data[0], ":"));
    yArr = float(split(data[1], ":")); //this is where the second error happens (if it gets past the first one)
    for (int i = 0; i < yArr.length; i++) {
      strokeWeight(4);
      point(xArr[i], yArr[i]);
    }
    println("Arrays");
    println(xArr);
    println(yArr);
  }
  //println(input);
  //println(data);
}
```

This is the server code:

```
//setting up the server
import processing.net.*;
Server s;
Client c;
String input;
int data[];

//imports Kinect lib
import SimpleOpenNI.*;
//defines variable for kinect object
SimpleOpenNI kinect;
//declare variables for mapped x y values
float x, y;
//declare variable for number of people
float peopleNum = 0;
//initialise other variables that we might need
int userId;
float inches;
int count = 0;
//set up arrays for all values - current x/y, previous x/y, depth
float[] oldXVals;
float[] newXVals;
float[] oldYVals;
float[] newYVals;
float[] storeDepth;

void setup() {
  //set the display size to full screen
  size(displayWidth, displayHeight);
  //declares new kinect object
  kinect = new SimpleOpenNI(this);
  //enable depth image
  kinect.enableDepth();
  //enable user detection
  kinect.enableUser();
  frameRate(10);
  //set background to white
  background(255);
  //initialise starting array lengths
  oldXVals = new float[20];
  newXVals = new float[20];
  oldYVals = new float[20];
  newYVals = new float[20];
  storeDepth = new float[20];
  //start a simple server on a port
  s = new Server(this, 61514); // Start a simple server on a port
}

void draw() {
  //updates depth image
  kinect.update();
  //draws depth image - if we don't need this comment out
  //image(kinect.depthImage(),0,0,displayWidth,displayHeight);
  //array to store depth values
  int[] depthValues = kinect.depthMap();
  //access all users currently available to us
  IntVector userList = new IntVector();
  kinect.getUsers(userList);
  //sets variable peopleNum to the number of people currently detected by the kinect
  peopleNum = userList.size();
  //change the length of the array to the number of people currently present
  newXVals = new float[int(peopleNum)];
  newYVals = new float[int(peopleNum)];
  storeDepth = new float[int(peopleNum)];

  //for every user detected, do this
  for (int i = 0; i < userList.size(); i++) {
    //get the userId
    userId = userList.get(i);
    //declare PVector position to store x/y position
    PVector position = new PVector();
    //get the position
    kinect.getCoM(userId, position);
    kinect.convertRealWorldToProjective(position, position);
    //calculate depth
    //find the position of the center of mass *640 to give us a number to find in depth array
    int comPosition = int(position.x) + (int(position.y) * 640);
    //locate it in the depth array
    int comDepth = depthValues[comPosition];
    //calculate distance in inches
    inches = comDepth / 25.4;
    //map x and y coordinates to full screen
    x = map(position.x, 0, 640, 0, displayWidth);
    y = map(position.y, 0, 480, 0, displayHeight);
    //store current values for each user in arrays
    newXVals[i] = x;
    newYVals[i] = y;
    //store depth values in array for each user (just the current values)
    storeDepth[i] = inches;

    //DRAW THINGS HERE
    //To draw with only the current values, use newXVals and newYVals
    //i corresponds to position in array
    //setting these parameters means there is no "drop off" at the edges
    if (newXVals[i] >= 50 && newXVals[i] <= width - 50) {
      if (newYVals[i] >= 50 && newYVals[i] <= height - 50) {
        //setting this parameter means that if a person is too close (less than 35 inches), they are ignored
        if (storeDepth[i] >= 35) {
          strokeWeight(4);
          point(newXVals[i], newYVals[i]);
        }
      }
    }

    //prints each userId with its corresponding x/y values
    //println("User: " + userId + " xPos: " + x + " yPos: " + y + " depth: " + inches);
    //prints the number of people present continuously
    //println("There are " + numPeople + " people present");
  }

  if (newXVals.length >= 1 && newYVals.length >= 1) {
    String[] newXValsString = nfc(newXVals, 1);
    //println(newXValsString);
    String joinedXVals = join(newXValsString, ":");
    String[] newYValsString = nfc(newYVals, 1);
    //println(newYValsString);
    String joinedYVals = join(newYValsString, ":");
    s.write(joinedXVals + "#" + joinedYVals + "\n");
    println(joinedXVals + "#" + joinedYVals + "\n");
  }

  //copy new value arrays to old value arrays
  arrayCopy(newXVals, oldXVals);
  arrayCopy(newYVals, oldYVals);
}
```

Kinect SimpleOpenNI

You can use this one:

Has anyone successfully exported a Processing app using Simple-OpenNI on Mac OS X?

I have the same issue: when I copy the simpleOpenNI folder to the root directory, the application runs (I can tell the Processing sketch is running because the terminal displays console output), but I can't see anything because the screen is still grey.

I've tried your suggestion too, @fubbi, but nothing seemed to work. Did anyone end up figuring it out?

userMap questions

@kfrajer, regarding the first question: I see that I'm managing the whole screen, so if I want to resize it, I can just use this.resize(newWidth, newHeight), right?

As this is a Kinect-specific forum, I believe people should be used to it. SimpleOpenNI is an abandoned library, its documentation is well below lousy, and even the hardware itself has been discontinued by Microsoft.

But the real enigma is here, in what you said:

> I need to see this hidden data. I need to know the nature and dimensions of this data to be able to assign it to your PGraphics object. However, this is not clear from the example that you provided.

This is exactly my question. I managed to isolate which pixels belong to which user, and now I want to work with them separately, for example removing the red values from user 1 and the green values from user 2.
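A minimal sketch of working on each user separately, assuming (as the glitch thread above does) that each entry of `userMap()` holds the ID of the user at that pixel and 0 for background, and that pixel colours are ARGB ints as in Processing's `pixels[]`. `extractLayer` copies one user's pixels into an otherwise transparent array, which could then be written into the `pixels[]` of a same-sized image; `applyChannelMasks` clears colour channels per user with a bitwise AND. `UserLayers` and the mask map are hypothetical names, not SimpleOpenNI API.

```java
import java.util.Map;

class UserLayers {
    // New ARGB layer holding only userId's pixels; everything else is
    // fully transparent (0x00000000)
    static int[] extractLayer(int[] pixels, int[] userMap, int userId) {
        int[] layer = new int[pixels.length];
        for (int i = 0; i < pixels.length; i++) {
            if (userMap[i] == userId) layer[i] = pixels[i];
        }
        return layer;
    }

    // ANDs each pixel with its user's mask, in place: 0xFF00FFFF drops red,
    // 0xFFFF00FF drops green. Pixels whose user has no mask are left unchanged.
    static void applyChannelMasks(int[] pixels, int[] userMap, Map<Integer, Integer> maskByUser) {
        for (int i = 0; i < pixels.length; i++) {
            Integer mask = maskByUser.get(userMap[i]);
            if (mask != null) pixels[i] &= mask;
        }
    }
}
```

For your example, the masks would be `0xFF00FFFF` (drop red) for user 1 and `0xFFFF00FF` (drop green) for user 2. If you copy a layer into a `PImage`, create it with `createImage(w, h, ARGB)` so the transparent pixels stay transparent.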